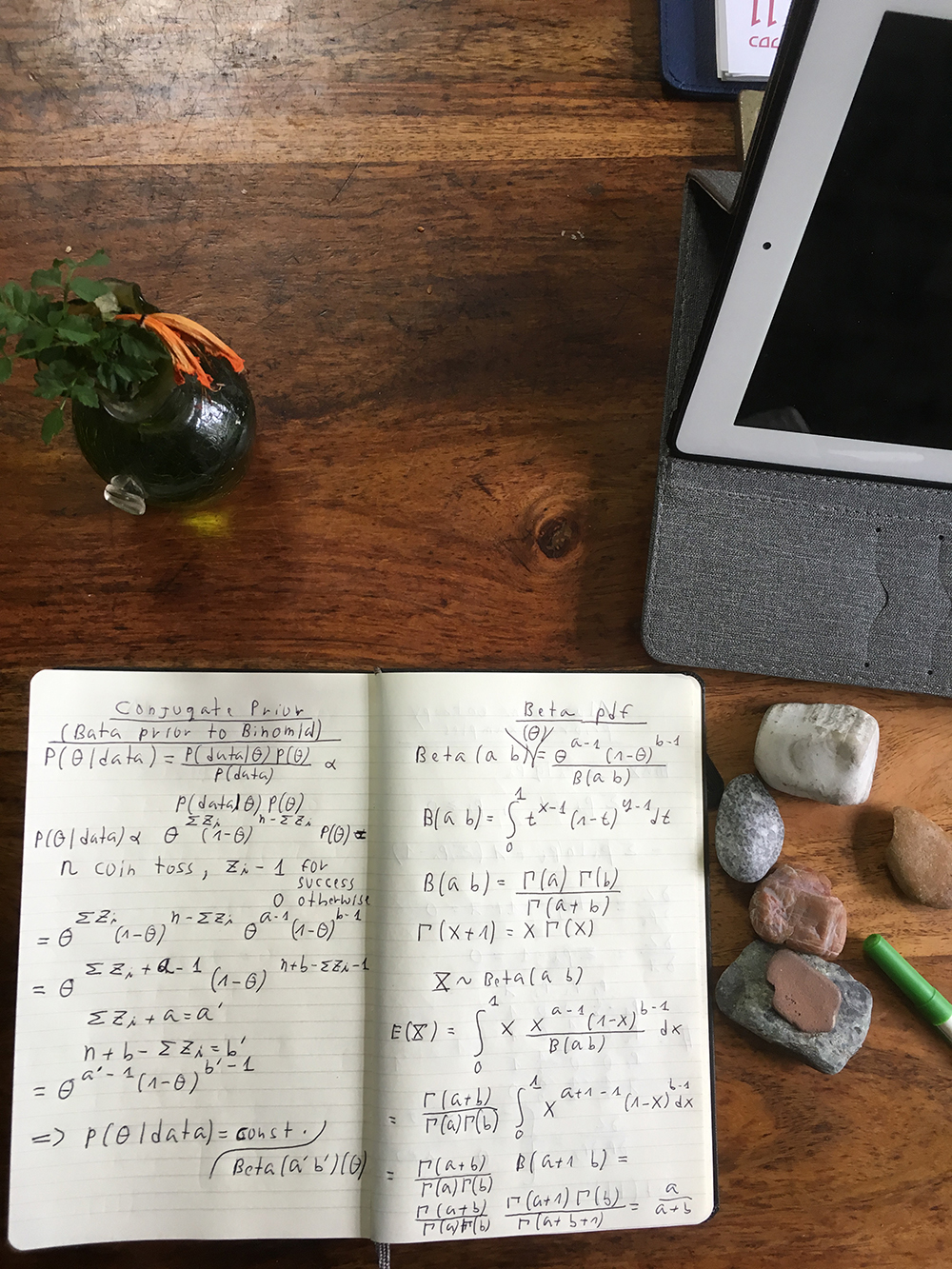

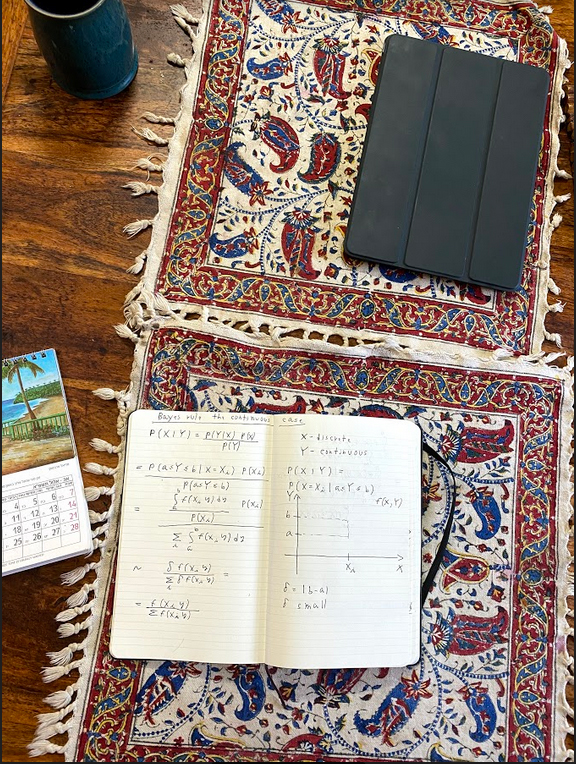

In the Bayesian setting, probabilities represent our beliefs about the state of the world, and we can update them incrementally after each experiment: the posterior from one experiment becomes the prior for the next. This naturally leads to sequential processes.
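To make the incremental update concrete, here is a minimal sketch assuming a coin with unknown bias and a Beta prior (a conjugate choice made here purely for illustration; the function names are hypothetical). Each observation turns the current belief into the prior for the next one.

```python
from fractions import Fraction


def update_beta(alpha, beta, outcome):
    """Return Beta parameters after observing one Bernoulli outcome (1 = success)."""
    return (alpha + 1, beta) if outcome else (alpha, beta + 1)


def sequential_posterior(outcomes, alpha=1, beta=1):
    """Fold the observations into the belief one at a time.

    The default Beta(1, 1) prior is uniform on [0, 1]; that default is an
    assumption of this sketch, not something prescribed by the text.
    """
    for outcome in outcomes:
        alpha, beta = update_beta(alpha, beta, outcome)
    return alpha, beta


def posterior_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution as an exact fraction."""
    return Fraction(alpha, alpha + beta)


flips = [1, 0, 1, 1, 0, 1]  # made-up data: 4 successes, 2 failures
alpha, beta = sequential_posterior(flips)
print(alpha, beta, posterior_mean(alpha, beta))
```

Because the update only depends on the current parameters, processing the data in one batch or in several stages gives the same posterior, which is what makes the sequential view natural.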

How to choose priors?

If the Bayesian framework is accepted, choosing a prior well becomes an important step in capturing domain knowledge. The pitfall to avoid is encoding assumptions in the prior that you don't actually know to be true. The maximum entropy principle is aimed at avoiding that: among all distributions consistent with what you do know, pick the one with the largest entropy. Chapter eleven of E.T. Jaynes' book "Probability Theory: The Logic of Science" covers the maximum entropy principle for choosing priors and gives it an axiomatic treatment. See my summary slide.

Additional resources include an MIT lecture (from minute 8 to minute 35). "It's the humble way of assigning prior probabilities." It's a fun lecture! If you like it, see the full course information here.

See a presentation on different ways to choose priors that covers, among other things, the principle of maximum entropy under constraints. See another presentation here.
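As an illustration of maximum entropy under a constraint, consider a die for which all we know is its long-run average. With only a mean constraint, the maximum entropy distribution has the exponential form p_i ∝ exp(λ·x_i), and λ is fixed by the constraint. The sketch below finds λ by bisection; the function name, the bracketing interval, and the tolerance are assumptions of this example.

```python
import math


def maxent_pmf(values, target_mean, tol=1e-12):
    """Maximum entropy pmf on `values` subject to a given mean.

    The solution has the form p_i proportional to exp(lam * x_i); the mean is
    increasing in lam, so lam can be found by bisection.
    """
    values = list(values)
    if not min(values) < target_mean < max(values):
        raise ValueError("target_mean must lie strictly inside the value range")

    def pmf(lam):
        exps = [lam * v for v in values]
        shift = max(exps)  # subtract the max exponent for numerical stability
        weights = [math.exp(e - shift) for e in exps]
        total = sum(weights)
        return [w / total for w in weights]

    def mean(lam):
        return sum(v * p for v, p in zip(values, pmf(lam)))

    lo, hi = -50.0, 50.0  # assumed bracket; wide enough for a six-sided die
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return pmf((lo + hi) / 2)


faces = [1, 2, 3, 4, 5, 6]
print(maxent_pmf(faces, 3.5))  # no extra information: close to uniform
print(maxent_pmf(faces, 4.5))  # higher mean: weight shifts toward large faces
```

When the stated mean equals the mean of a fair die, the result is the uniform distribution, so the constraint adds nothing beyond what was already assumed. Any other mean tilts the distribution only as much as the constraint requires.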

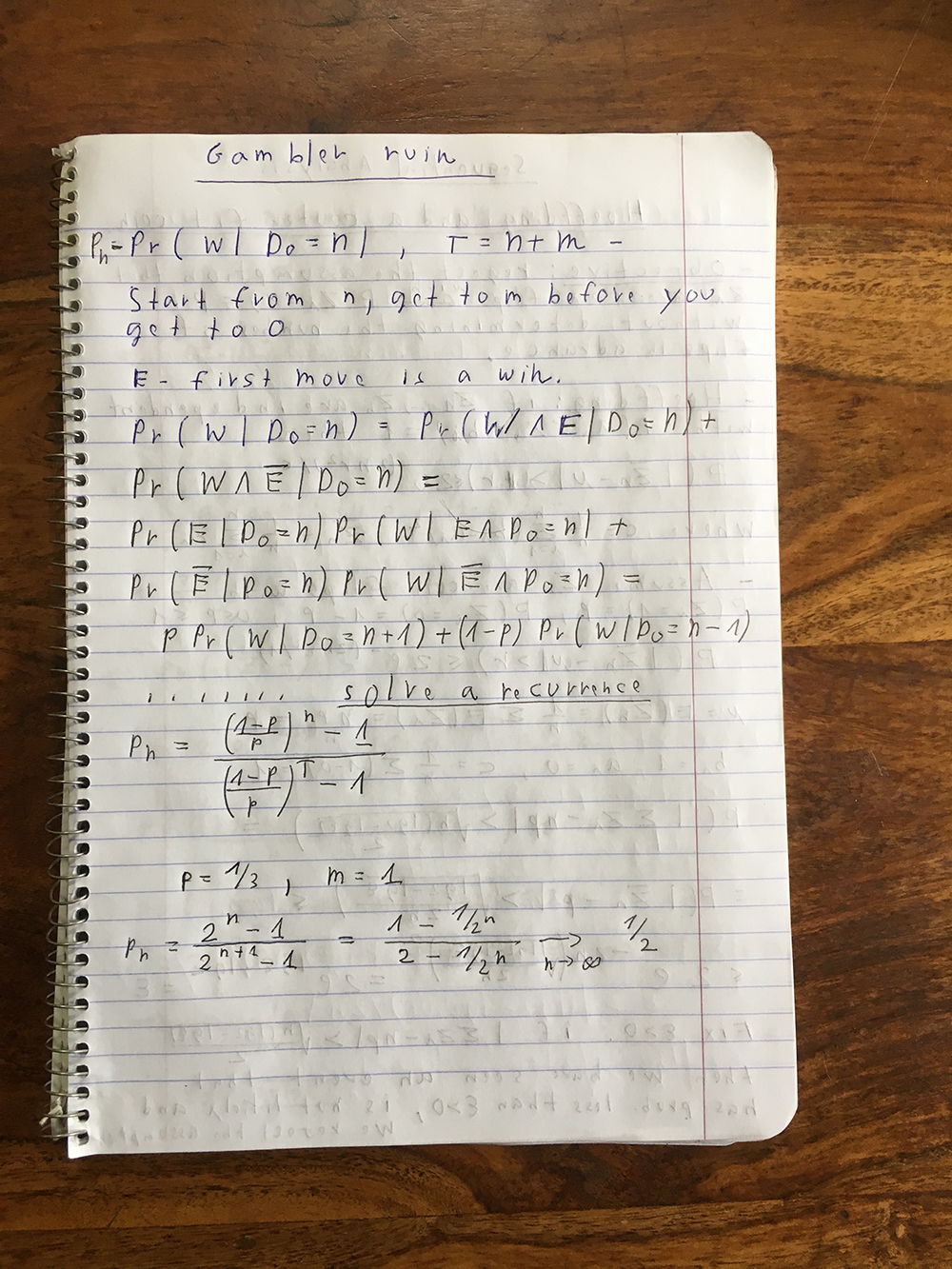

Here we'll discuss the gambler's ruin problem: