Q & A

About Dr. Samuel Ackerman

Dr. Samuel Ackerman is a data science researcher at IBM Research, Haifa. Currently, he is focused on statistical and ML analysis for improving hardware verification systems, and providing general statistical consulting within IBM. Sam received his Ph.D. in statistics from Temple University in Philadelphia, PA (2018), where his thesis research involved applying Bayesian particle filtering algorithms to analyzing animal movement patterns. Previously to IBM, he worked as a research assistant at the Federal Reserve Board of Governors (Washington, DC), and as an instructor in business math at Temple.

Sam welcomes any feedback on the topics discussed below, as well as any suggestions for questions or topics people would like to see discussed, especially if they could be beneficial to the general IBM community. Please use the email link to the right.

Question 01: I have a sample of values of a random variable. I want to conduct statistical inference on the variable based on the sample, for instance to construct a confidence interval for the value or estimate its distribution, while making as few statistical assumptions as possible. What can I do?

Answer:

We have a sample of values of a random variable. The beginning assumption we must make is that the values are iid (independent and identically-distributed). The independence assumption is standard and fairly reasonable. These assumptions mean that the sample values are actually representative of the distribution of the random variable (which is unknown, but must be inferred from the sample).

A confidence interval is an interval of values of the variable (typically two-sided, such as (-1.5, 6.5)) with an associated level of confidence (between 0 and 1, but typically a high value, such as 0.9, 0.95, 0.99, if this interval is to be useful). The interval is a useful summary and says that we expect the variable X to be within the interval values, say, 95% of the time. The confidence intervals many people are familiar with rely on the Central Limit Theorem (CLT), which uses the normal distribution. However, we want to avoid making distributional assumptions, because intervals based on the CLT sometimes make assumptions that are too rigid in practice.

A density estimate consists of saying “the probability that X takes on value x is p”. Sometimes, it is reasonable to assume X (approximately) takes a particular parametric form, such as normal distribution, Poisson, Gamma, etc. In this case, the appropriate parameter values can be estimated from the sample. However, it is often the case that observed values do not follow a known (or simple) parametric form, and hence we may want a more general (non-parametric) method. A non-parametric density estimate typically means calculating a discrete approximation of the true density by taking many points x in the domain of the distribution (the range of allowed values) that are spaced closely together, and calculating the density at each. For instance, say X takes values between 0 and 4 (based on the observed sample), then we may specify a discretization at x=0, 0.01, 0.02,\dots, 3.98, 3.99, 4.0, and estimate the density at each point. Density estimation is implemented in functions such as R’s “density”, and in Python in statsmodels.api.nonparametric.KDEUnivariate; the discretization is usually chosen automatically in the software.

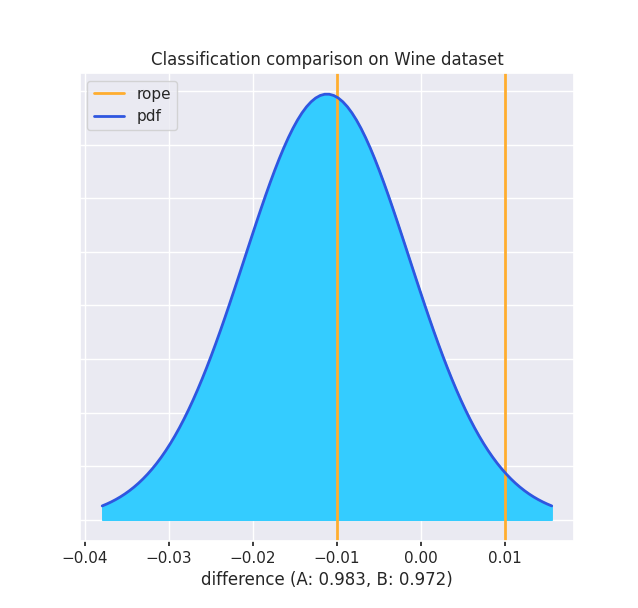

Bootstrapping is a commonly-used technique of non-parametrically estimating the density (which can then be used to construct a confidence interval) of a variable based on a sample of values of the variable. This is particularly useful if the sample is small (< 30 as a good rule of thumb) or if there is no reason to assume a particular distribution of it (for instance, the values can be a set of R^2 measures from regressions or accuracy scores of a model when applied to independent datasets).

Bootstrapping is implemented in R in the “boot” package, and in Python in scikits.bootstrap.

Say the sample consists of N values \{x_1,\dots,x_N\} of the variable X. We want to estimate the distribution of an arbitrary statistic S(X) of the variable X. If we want to estimate the distribution of X itself, we might have S be mean(X), for instance. The distribution of X is approximated by repeated resamplings of the values of the given sample. Since we don’t have access to the full distribution of X (otherwise we wouldn’t need to do this), we only use the values we have, but any statistical inference needs to be adjusted to reflect this.

The most basic form of the bootstrap consists of forming a large number R (say, a few hundred) draws of the same size N with replacement from the original sample \{x_1,\dots,x_N\}. Let r_j,\: j=1,\dots,R, be the j^{\text{th}} resampling of \{x_1,\dots,x_N\}; since resampling is done with replacement, we expect there to be repeated values of various values x_i in r_j, while some values x_i may not be drawn at all. Since we assume \{x_1,\dots,x_N\} is a random sample of X, typically the values x_i are drawn with equal weights \{w_i\}=\{w_1,\dots,w_N\}, where each w_i =1/N. However, if there is reason to assume particular values of x_i in the original sample are “more important” (for instance, maybe that x_i a classification accuracy based on a larger underlying dataset than the others), then w_i may be adjusted to reflect this.

Let s_j = S(r_j) be the value of the statistic on resampling r_j. The set \{s_1,\dots,s_R\} can thus be used to perform inference on S’s distribution. Since the R resamplings are done independently and in the same way, the values \{s_1,\dots,s_R\} are equally-weighted.

Note on the performance of bootstrapping: Bootstrapping can be computationally intensive if R or N are large. Typically, N is relatively small, and R is larger. These factors should be taken into account when considering bootstrapping.

Confidence intervals for S based on bootstrapped values \{s_1,\dots,s_R\}

Say we want to construct a 95% confidence interval for S based on \{s_1,\dots,s_R\}. The most naïve approach would be, as with the CLT normal intervals, to assume the distribution of S is symmetric, and thus our interval should be (s_{(0.025)}, s_{(0.975)}), where these are the 2.5th and 97.5th empirical quantiles of \{s_1,\dots,s_N\}; notice \textrm{Pr}\left(s_i \in (s_{(0.025)}, s_{(0.975)})\right) = 0.95, since 0.95=0.975-0.025. In Python’s “bootstrap”, this is implemented in ci=“pi” and in R, as type=“perc”.

A similar but still naïve approach is to form a “high density interval” (HDI) of a given confidence level, say 0.95. The “best” interval is the interval that is shortest among those of fixed confidence; if the distribution is symmetric, this corresponds to the symmetric “percentile” one. The HDI uses the set \{s_1,\dots,s_R\} and finds s_{(a)} and s_{(b)} such that the empirical probability between them is 0.95 and s_{(b)} – s_{(a)} is the smallest possible (tightest such interval). This is implemented in R in the HDInterval package and in Python under stats.hpd.

A better approach is the bias-correction adjusted interval (“bca”, which is the default in the Python implementation). The naïve approaches treat the bootstrapped statistic values \{s_1,\dots,s_R\} as if they have the same empirical distribution as the same statistic S(X)’s population distribution (if we could just draw unlimited X and calculate S(x)). But this is not really true, so bootstrap estimates need to be adjusted to approximate the true population distributions. The bca approach does not assume symmetry, and uses additional jackknife sampling from the original data (making N subsets, leaving one out each time). For this reason, it becomes computationally expensive to calculate the bca interval on larger samples.

Question 02: What are good ways to represent text documents (long or short) as features for model-building?

Answer:

First, a brief background: Often, we want to perform analysis on a series of texts, such as calculating similarity between them for clustering, or building a classification model based on shared words. Usually this is based on analysis of the words in a document, and a matrix representation is used where each row corresponds to a document, and each column to a word in the vocabulary, so the words are model features.

In many applications, significant text processing is required. For instance, we usually remove stopwords (common words like “and”, “the”, which don’t give any special information about the text). Typically, lists of common stopwords in different languages are available in software implementation. Also, stemming is done to reduce the feature space by trimming words down to their “stems”. For instance, “working”, “work”, “worker”, “works”, are all related, and may be shortened to the same word “work” by removing suffixes. Text parsers also have rules to determine what is a “word” by parsing punctuation marks, space, etc. Here, we will not deal with these issues, and assume the user has done any necessary pre-processing.

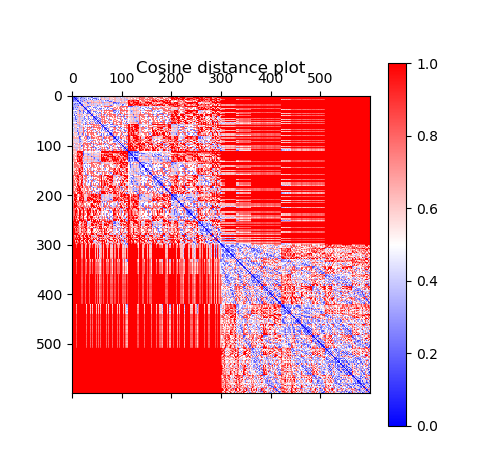

In a given row of a matrix, usually a word’s column is zero if the document does not contain the word, and nonzero if it does. Usually, the matrix is sparse (most of the entries are 0-valued) because the set of possible words used (which usually determines the number of columns) is large relative to the number of unique words (number of nonzero entries) in each document. That is, each document typically only has a small subset of the possible words. Furthermore, most documents will only share few words, if any.

Measuring similarity

However, the number of shared words may be important in determining similarity between rows. Therefore, it is common to use distance measures like cosine distance for texts, as opposed to the more common Euclidean distance. This is because 0-valued entries in rows mean the word is not present. If two documents both have 0s in many of the same columns (remember, the matrix is sparse, so this will happen a lot), and Euclidean distance will treat these rows as similar. However, we want to measure similarity based on the number of shared words relative to the total number of words that appear in at least one of the documents, as opposed to relative to the whole vocabulary size, as in Euclidean distance. This is what cosine distance does, by taking the dot product (product of entries in columns that were nonzero/present for both documents), relative to the magnitudes of the vectors (related to only the nonzero entries in each).

Matrix encoding

There are a few options for document encoding. Expressing each word as a feature or column is called one-hot encoding. Depending on the modeling goal, you may choose to encode a row entry as binary (either the word appears, or not), or as the frequency the word appears in the document. One particularly popular one is TF-IDF (term frequency-inverse document frequency). This means that a word’s entry depends on how often it occurs in the document (term frequency) but accounting for how often that word appears overall in the whole set of documents, or “corpus” (inverse document frequency). Recall, models usually succeed by identifying the important words that tell us a lot about documents, and these tend to be the more infrequent words. So, words that have high frequency but appear often in the corpus, such as “the”, aren’t very useful at all, but if a word appears often in one document but is overall infrequent, it is important for that document. Thus, two documents that share the same relatively infrequent word many times (e.g., two documents that refer to “photosynthesis” a lot are likely to be related). Keep in mind that what is a common word depends on the corpus, so while “cell” may be an infrequent word in newspapers, in a medical journal many documents will contain this word, and thus it will be of less importance in comparing documents. In the medical journal, all documents are already of the same “medical” category, but we may need to differentiate between more-narrow sub-categories.

TF-IDF is implement in Python’s sklearn module, where the vectorizer automatically learns the vocabulary from a corpus of texts, and constructs the matrix encoding. This is just the basic idea, but there are more specialized modifications of the algorithm.

Feature Hash encoding

- There are several drawbacks of TF-IDF and other one-hot encoding methods:

- If they are used to, say, predict or cluster with new documents that have any words that have not been seen before, and thus have not been encoded to features, the model will not work. This is because the number of matrix columns is determined by the number of unique words in the training corpus. You could just add new 0 columns to the original matrix, but this is not ideal.

- If new documents are added to the training corpus (with no new words), the matrix entries have to be recalculated because the frequencies of the words in the corpus (document frequency) has changed. We wish to avoid extra recalculation when new data is added.

The first drawback may be the more serious, because you want to keep your model useful in the face of new data.

Hash functions provide one good approach. Feature hashing is similar to one-hot encoding except that the user first (before training a model) specifies the number of columns in the data matrix. Typically, this has to be a big number, often around a million or so. It is best to have it be 2 raised to some integer power. On the one hand, a larger matrix needs to be constructed, but the hash function allows new features to be mapped to the existing space, thus allowing new words and documents to be incorporated without having to completely rebuild the model.

Briefly, what is a hash function? Hashes map objects into a span of existing space (here, for instance, the space of columns) in a “hidden” way. For instance, when you construct a dict object in Python (the equivalent in Perl is a hash object), the keys are stored in an order determined by a hash function, not in the order you created them. Say you enter the following commands:

#create dict

tmp = { "hello": 2, "world": 3 }

tmp.keys()

#dict_keys(['hello', 'world'])

print(tmp.keys()[ 0 ]) #try to access the first key, you may expect “hello”

#TypeError: 'dict_keys' object does not support indexing

print(list(tmp.keys())[ 0 ])

#'hello'

Trying to access the keys by their index doesn’t work, since the order of internal storage doesn’t mean anything. You should access them just by iterating over tmp.keys() rather than over the indices.

Feature Hash encoding in Python

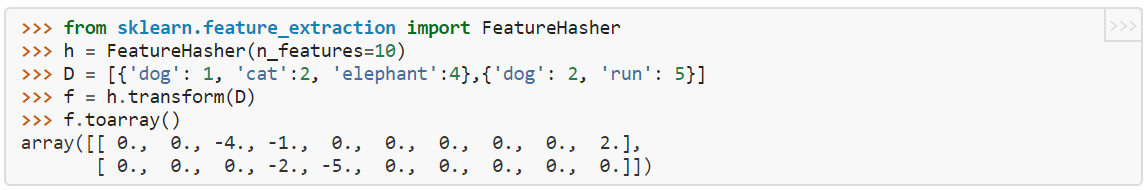

Feature hashing is implemented in Python in sklearn.feature_extraction.FeatureHasher. It uses the MurmurHash algorithm to assign indices. The following example is given in the documentation above:

Here, dictionary D contains two “documents”, one of which has the words (“dog”,”cat”,”elephant”), the second has (“dog”,”run”). Each word is represented as a dict key, with the value being the frequency of the word in the document. “h” is initialized as a hash with 10 features (notice we recommend that the number of features be an exponent of 2, due the values being positive or negative, which here 10 is not). f is the resulting 2xn_features array where D is encoded according to h. Each word gets mapped to a column: here elephant -> column 2, dog -> column 3, run -> column 4, cat -> column 9. Notice that in this simple case, there are no collisions, since each word gets mapped to its own column (feature). For a fixed vocabulary size (here, 4), the fewer the number of features, the more likely there are collisions. For n_features =4…9, there are still no collisions, but obviously setting n_features =3 (< vocab size) forces a collision. We would get the matrix

array([[-1., 2., -4.], [-2., -5., 0.]])

This means that the “run” (=-5) and the “cat” (=2) words have been assigned to the same feature (a collision of assignment), which means we have lost the information of them being two different words. The values are assigned either negative or positive values so that in the event of collisions, there will be some cancellation of values so that a feature doesn’t artificially get higher weight by getting more values assigned to it when there is a collision.

Generally, the number of hash features needs to be set much higher than the number of words, to reduce the number of collisions. If the number of words is known to be small and of fixed size, one-hot encoding may be better than hashing. The blog article contains some good mathematical experiments relating the number of features and expected collision.

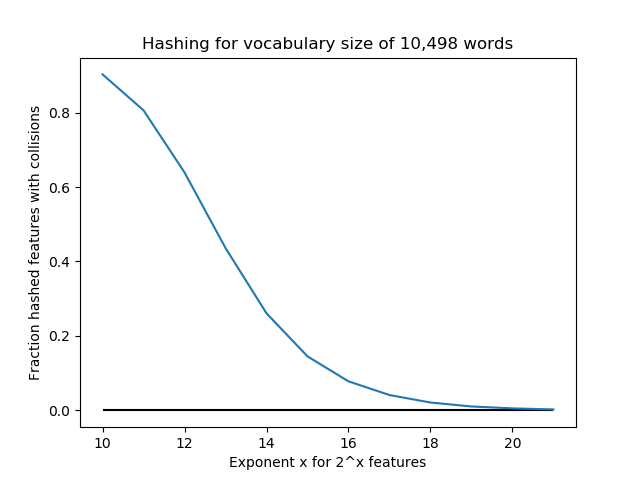

Below, we conduct an experiment with a vocabulary size of 10,498 “words”. For n_features = 2^k for k=10,…,21, we use FeatureHasher to assign these words to features. For these values k, the number of features are [1024, 2048, 4096, 8192, 16384, 32768, 65536, 131072, 262144, 524288, 1048576, 2097152]. The fraction of collisions is (10,498 - #features)/10,498. Notice that for k=10,…,13, n_features < 10,498, so there are guaranteed to be a large number of collisions (at least 50%). We see that increasing the number of features exponentially quickly lowers the number of collisions, so perhaps 2^18=262,144 or so is enough for this application. But in this case, we could have done one-hot encoding and saved a lot of storage space.

Question 03: I have some data I am monitoring over time. It is possible that there will be some change to the data, and I want to detect this change. I also want to have statistical guarantees about the accuracy of my decision, but want to make as few assumptions about the data as possible. What is the correct way to formulate this problem?

Answer:

We will begin by describing a general formulation and discussing some of the general issues that occur in sequential hypothesis testing. Then, we show how our formulation can be extended to applications such as detection of changes when data arrive in batches, or if you want to detect change relative to some withheld baseline sample.

- Basic setup:

- Let t=1,\dots,T be indices of time

- Let X_t be some univariate value observed at t

- We want to conduct a statistical test of a “changepoint”, meaning that for some index k\in \{2,\dots,T\}, X_k (or some subset \{X_k,\dots,X_{k+j}\}) is significantly different from the preceding set \{X_1,\dots,X_{k-1}\}.

Statistical guarantees

What do we mean when we say we want a statistical guarantee about when we declare a change has happened? Typically, we can never be absolutely sure about a decision, but we can quantify the degree of confidence in it, say 95% that we are correct (or, less than 5% incorrect); this allows us to make a good statistical decision.

Now, what does it mean for a decision to be “wrong”? We have to make a decision between “no change” (“negative”) or “change happened” (“positive”). A mistake happens if either

- A change has not happened, but we said it did (“false positive” declaration, also called a “Type 1 error”)

- A change happened, but we failed to say it did (we declare “negative”, which is not true, so this is a “false negative”, also called a “Type 2 error”)

- At some time k < T, we make a final declaration that a change has occurred. We stop the process here, and do not observe the rest of X_{k+1},\dots,X_T to be able to re-evaluate our decision.

- We reach t=T without declaring a change, in which case we say, “no change has occurred”.

- A single hypothesis test (e.g., two-sample t-test) is based on assuming you observe a complete (pre-specified) sample of size N (in our case, T), and make a single decision, whose Type-1 error is controlled at a pre-specified level α.

- If you prematurely, at n<N, “peek” at the data by conducting a hypothesis test on the data up to that point, then deciding if the data appear to be significantly different, you are likely to make a mistake. Why? This individual test might be okay, but if you repeatedly perform the test, each of which has probability α of false positive, and the change occurs at some k, the probability of making at least one mistake is much higher, more like 1-(1- \alpha)^k (approximately). See this article for additional explanation.

Typically, in statistical hypotheses, since the “negative” represents the status quo, we want to protect against a false positive mistake more than a false negative. For instance, if monitoring a computer network, “positive” might mean a hack has happened. However, every time we declare a hack has happened, this may have serious consequences (e.g., spending time and money investigating). Therefore, typically we want to have a guarantee of the form “if there has been no change (‘negative’), we have only a 5% chance of making a mistake by declaring a change has occurred (‘positive’)”. Obviously, we also want to make sure that if a hack has happened (‘positive’), we have a guarantee that we have not missed it (‘negative’), that is, the reverse. However, it is usually easier to guarantee the first condition. This is called controlling the false positive or Type 1 error probability.

What does it mean to conduct the test sequentially?

At each time t, we must make the decision “yes or no, based on the data X_1,\dots,X_t, so far, has a change occurred?” We will assume that one of the following happens, either:

A Type-1 error occurs if we declare time t=k to be a changepoint, but either (1) a change occurs at a later t > k, or (2) a change does not occur before T. Both are equivalent in our case, because we do not observe the data after k, and we assume we don’t look into the future. We want to control the probability of this mistake to be below some value α (say 0.05, or 5%). If a change has occurred at a time t, we also want to detect it as quickly as possible (i.e., declare at k, where k – t is as small as possible, but we can’t have k < t or this would be a mistake).

Why do I have to consider a special method for sequential change detection?

Data change point detection is essentially a distributional modeling problem, where a change is defined as a change in the distribution of the data, perhaps relative to some fixed point, or relative to the most recent values. Consequently, how you detect a change depends on how you model your data (univariate vs multivariate, parametric vs nonparametric, is data arriving in batches or not, etc.). You may think that, say conducting a set of standard hypothesis tests vs some fixed baseline X_0, one at each point in time, say a two-sample t-test, is valid if you want to control your overall probability of making a mistake. (To conduct a t-test, you need samples rather than single points anyway, an issue we will discuss below).

A key point is that sequential decision-making is fundamentally different than combining a sequence of standard non-sequential (meaning, they don’t take time into account) hypothesis tests, as above. This is for a few reasons:

Essentially what we need to do in a proper sequential test is to be able to “look” back at the data at each time t, while simultaneously guaranteeing that if we make a decision that change has happened, there is only a 5% (or so) chance that change has not happened. That is, this guarantee applies over all times t, but that this guarantee exists at the single time we make a decision. This property can be verified by generating simulated data repeatedly (e.g., 1000 times) from the process, with the same time of change, and you should see that in approximately 5% of runs, if a change point is identified, it will be a false positive, before the actual change point. One way this method might work is by having the hypothesis test decision thresholds shift over time, as opposed to the standard non-sequential methods, in which the thresholds stay constant.

cpm package for R

One particular implementation of a sequential detection method that is (1) nonparametric, (2) univariate, and (3) offers solid sequential statistical guarantees is the cpm (change point model) package by Gordon Ross (2015). A package overview is given in the article “Parametric and Nonparametric Sequential Change Detection in R: The cpm Package” (Ross, 2015), and the theory is outlined in “Two Nonparametric Control Charts for Detecting Arbitrary Distribution Changes” (Ross and Adams, 2012).

The following summarizes the key points of the article:

We consider sequential detection as a sequence of hypotheses at times t, where

h_t is the hypothesis test decision threshold at time t, for the hypothesis:

H_0: {no change happened as of time t yet} vs H_1:{change happened at some k < t}

At each t, a statistic W_t or D_t (the authors allow use of either a Cramer von Mises or Kolmogorov-Smirnov nonparametric distribution distance measure) is calculated for the hypothesis test at time t. Let’s assume W_t for now (CvM), but the same applies for the KS measure. The statistic W_t represents the result of looking back at all past times k < t, and seeing which k* is the most unusual (and hence the best candidate for a change point, if it indeed happened already, which is unknown). Our sequential test is then determined on the basis of the sequence of \{W_1,\dots,W_t\}. If at some t, the W_t appears to be unusual relative to the previous values (how we determine unusual will be discussed below), then we say that the distribution of data seems to have changed.

The key part here is that the hypothesis test thresholds h_t depend on time. This allows all of the following probabilities to hold at a constant α at all times (Equation 2 in the paper):

\text{P}(W_1 > h_1\mid \text{\underline{no change at 1}}) = \alpha and

\text{P}(W_t > h_t \mid W_{t-1} \le h_{t-1}, \dots\text{ and } W_1 \le h_1,\text{ and \underline{no change before t}}) = \alpha for all t

If W_t > h_t, we decide there has been a change as of time t. But the premature detection error probability at t (i.e., that W_t > h_t when a change has not occurred yet), given that you have reached t without detecting so far (i.e., W_k < h_k for all times k< t), holds at \alpha for all t by virtue of the thresholds h_t changing over time. This is the Type-1 error probability guarantee we are seeking. Keeping h_t constant (e.g., \pm 1.96 for \alpha=0.05), as the naïve non-sequential approach suggests, would result in many false detections since h_t (if left free to vary) would rise above the constant value.

Types of change detection in cpm:

The cpm method detects change points by unusual changes in the values of W_t or D_t relative to the previous values. The good thing is that this implementation allows for various methods of measuring distributional difference. If you know what type of change to expect at a change point (e.g., change in X_t mean, variance, etc.), you can choose the metric that is most sensitive to those.

The basic function form is detectChangePoint(x, cpmType, ARL0=500, startup=20, lambda=NA).

Here x is the data stream of X_t. The argument cpmType is the metric for determining change. Options like “Student” or “Bartlett” detect mean and variance changes, respectively, and “Kolmogorov-Smirnov” and “Cramer-von-Mises” can detect more general changes, though they will be slightly slower to detect, say, a change in the mean. Note, the KS and CvM here refer to methods of detecting a change in the W_t or D_t statistics, and not the methods to construct W_t or D_t themselves, referred to earlier.

As an additional note, the method requires an initial burn period, before which it cannot detect a change, since it needs a big enough sample of \{W_t\} to establish a typical baseline. This is specified by the parameter “startup” (default=20, also the minimum value). The significance is that \alpha=1/\text{startup}, so in this case the default \alpha=0.05 as is typical. Essentially, to control Type-1 error at a lower rate, you have to have a longer startup period, for instance 100 for \alpha=0.01.

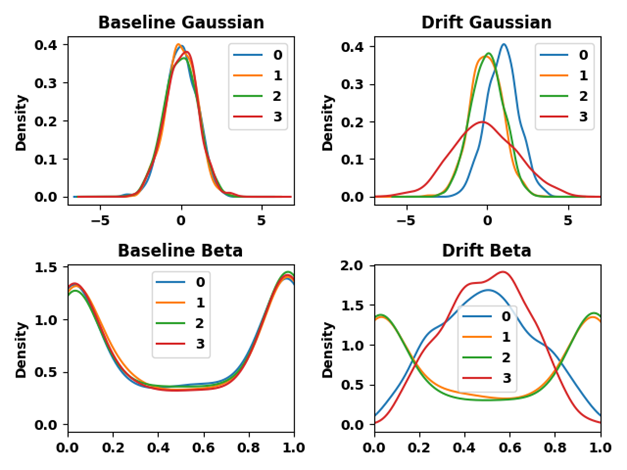

How cpm can be adapted to drift detection vs a baseline sample

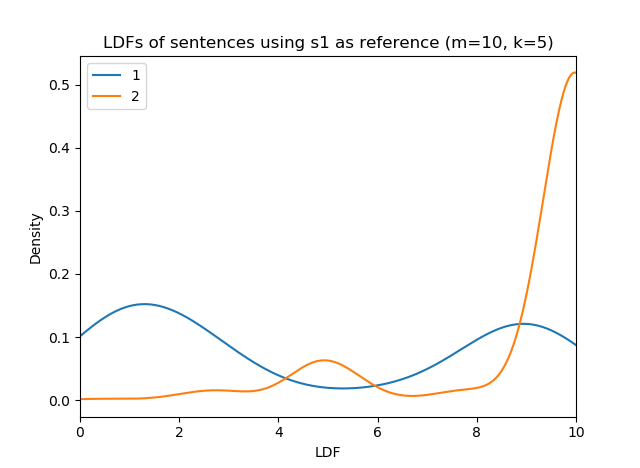

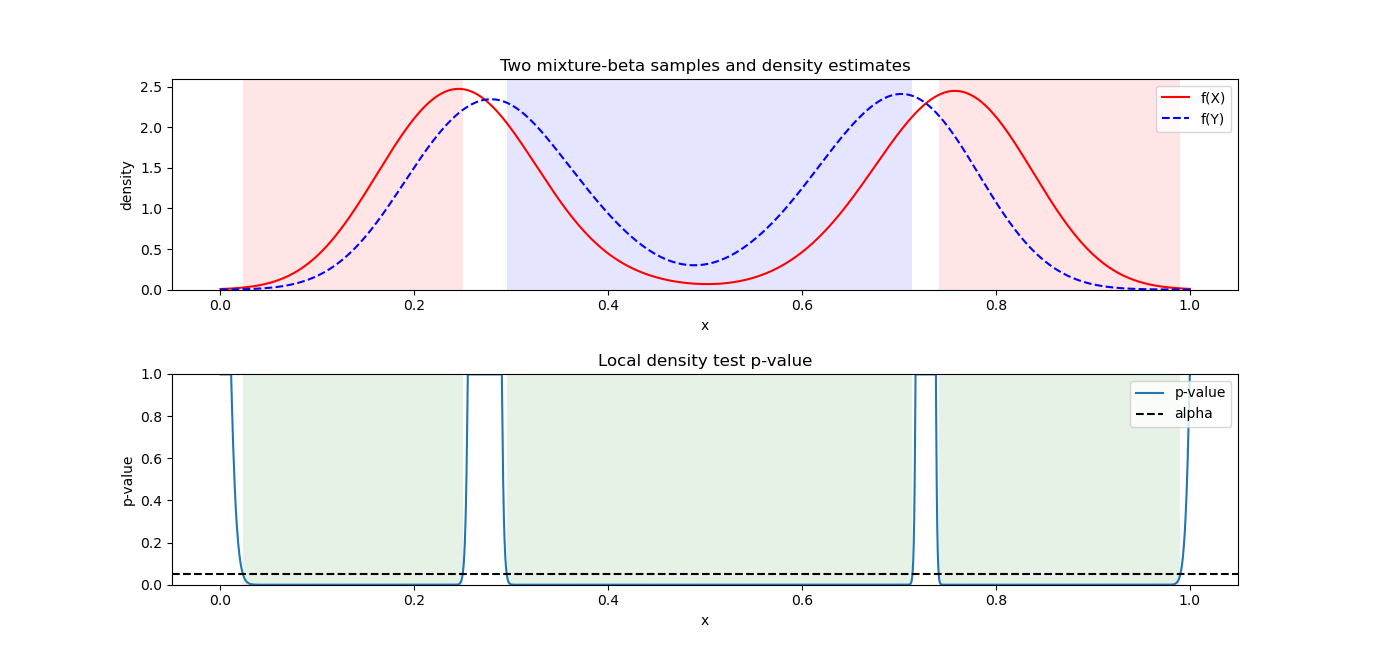

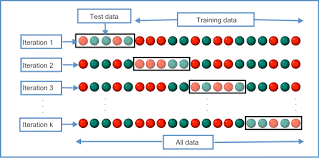

The above general discussion of the cpm package formulates detecting change points by differences among the sequence of data \{X_1,\dots, X_t\} observed up until each time point t. We would like to adapt this setup to where we have a baseline sample \{X_0\} and we want to detect drift (divergence) by comparing \{X_1,\dots,X_t\} (production data) to this baseline. Recall here thatx_iare assumed to be univariate.

We can reformulate this by assuming that our observed data is actually some Z_1,\dots,Z_{T*}, which we consider in batches of fixed size m. For instance, the j^{\text{th}} batch is Z_{j*m},\dots,Z_{j*(m+1)-1}. Let T*=T\times m for simplicity, such that the windows of size m exactly cover the data \{Z\}. Let’s assume the baseline sample is a set of values \{Z_0\}; this sample does not have to be of size m.

One way to identify drift is to see not whether the production data \{Z\} seem to be different than the baseline sample \{Z_0\}, but whether the nature of their difference changes. It is reasonable to assume that the Z data at the beginning of production are similar to the baseline.

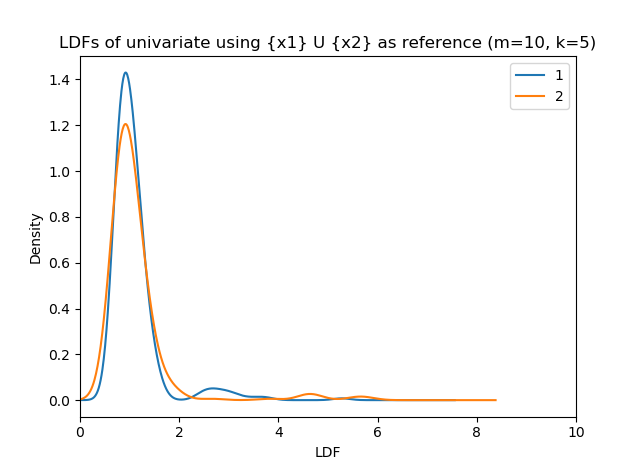

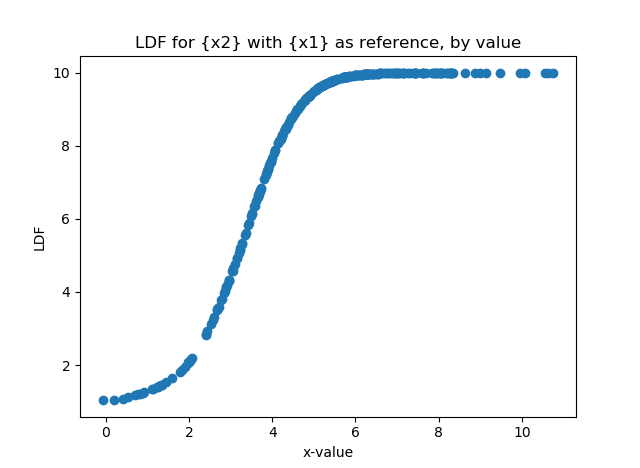

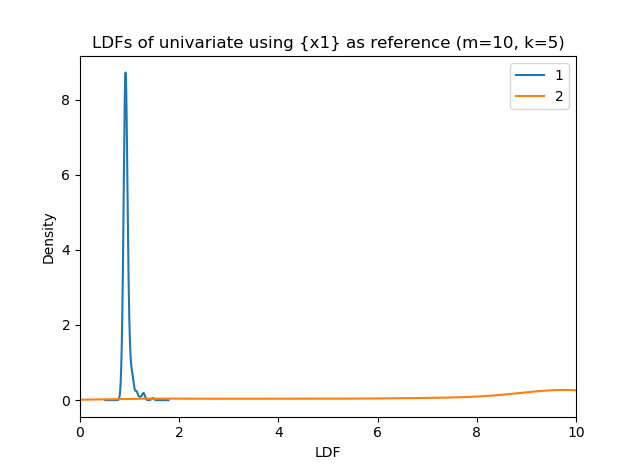

Let \text{Dist}(a, b) be some distance measure between samples a and b, such as Wasserstein or CvM distance. It’s better if this is the measure itself, and not, say, the p-value for a hypothesis test based on the measure, which is a less powerful indicator. Then, let the values \{X_1,\dots,X_T\}, on which we actually perform the sequential test, be

X_t = \text{Dist}\left(\{Z_0\} \:,\quad \{Z_{t*m},\dots,Z_{t*(m+1)-1}\}\right)

that is, the distance between the baseline and the t^{\text{th}} batch of Z. This distills the t^{\text{th}} batch of Z into a single value. The reason we have considered the data originally in batches is that an effective distance metric of comparison with the baseline can only be calculated if the data are samples and not single values. Drift can be indicated by whether the distance metric suddenly changes in value, assuming that similar values of distance will describe similar production batches (i.e., that it is a sensitive measure). Probably the best way to detect such a change is through a sequential t-test, which will be sensitive to changes in the mean value of the distance metric (which itself is a nonparametric measure of distance between the samples, rather than being based on the mean of the samples, so that it can capture arbitrary changes in the data).

Question 04: I have two samples, each representing a random draw from a distribution, and I want to calculate some measure of the distance between them. What are some appropriate metrics?

Answer:

The correct approach is to use a distance metric that is nonparametric, and takes into account the shape of the distributions estimated by the samples.

We also assume that the two samples, denoted \{x\} and \{y\}, are of arbitrary sizes n_x and n_y, and represent random draws of two random variables X and Y, respectively. The fact that we allow the sample sizes to differ means that we will not consider situations of paired data samples, where, by definition n_x=n_x=n; for instance, there are n individuals and \{x\} and \{y\} consist of 1 observation for each individual. One example is where \{x\} and \{y\} represent pre- and post-treatment measurements on each individual in a clinical trial. In this case, a test such as paired t-tests can be used.

A further assumption is that we want a symmetric measure. Symmetry means that \text{dist}(x,\: y)=\text{dist}(y,\: x),\:\: \forall x, y, so the order of which sample we compare to the other does not matter. By definition, distances must be symmetric (though some do not satisfy the triangle inequality requirement necessary to be metrics). There are other classes of distributional comparison measures, such as divergences. Kullback-Leibler divergence, for instance, is an information-theoretic measure that measures the amount of information, or entropy, lost when using one distribution instead of another. These measures are not generally symmetric. For more information, see this article.

We will consider several situations: (1) univariate continuous, (2) rank-based comparison of univariate continuous samples, and (3) multivariate data.

1: Distances between univariate continuous-valued samples

- Kolmogorov-Smirnov (KS): Consider a random variable X with density (distribution) function f_X. The cumulative distribution function (CDF) of X is denoted F_X, and is defined as F_X(x)= \text{Pr}(X \leq x). The KS statistic calculates the distance between two random variables X and Y as \text{sup}_z |F_X(z)-F_Y(z)|. That is, it is defined as the greatest absolute value difference between the CDFs of the two random variables over their domains. Here, F_X and F_Y can be the empirical CDFs from the two samples \{x\} and \{y\}, or one or both of them can be the CDF of a specific parametric distribution. Thus, it can be used as a goodness-of-fit measure to see if a sample appears to be distributed similar to, for example, a normal distribution. The KS distance is bound in the interval [0,1], and is the basis of a statistical test to decide if two distributions are similar enough (e.g., if two samples may come from the sample distribution). While the KS statistic can measure arbitrary differences in distribution, it is also known to be conservative (i.e., it requires a larger amount of data to detect differences).

- Anderson-Darling (AD) and Cramer von-Mises (CvM): The AD statistic is similarly based on distances between the CDFs (F(x)) of distributions, and like the KS statistic, can be used as a goodness-of-fit test between a sample and a given parametric distribution. Compared to KS, it is known to achieve good results with less data, and thus the test converges more quickly. The AD statistic uses a weighting function w(x) on the values x of distributions. The weights tend to put more emphasis on differences at the tails of the distributions. The CvM distance is a special case in which all the weights are equal. However, the values of the statistics are not very interpretable. The two-sample AD test (i.e., for measuring distance between two samples) is a relatively new innovation, and is implemented in software such as scipy.stats.anderson_ksamp (an extension to k>1 samples); here, the normalized statistic is returned, not the actual distance. This paper compares performance of KS and AD tests.

- Wasserstein (earth-mover’s) distance: Wasserstein distance (WD) is a metric that can be used for both univariate and multivariate arbitrary samples. Imagining each sample’s distribution as a pile of dirt, WD is interpreted as the total distance needed to move each grain of dirt from one pile to form the other pile (taking into account the shape and shift of each distribution). For this reason, it is often called “earth mover’s distance”. In R, this is implemented in the “transport” package under functions “wasserstein1d” and “wasserstein” (for higher dimensions, which appears to require equal sample sizes). In Python, scipy.stats.wasserstein_distance implements the 1-D case. The 2-D empirical distance is implemented in ot.emd (ot=“optimal transport”, emd=“earth mover’s distance”). Also, ot.gromov.gromov_wasserstein and ot.gromov.entropic_gromov_wasserstein compute an extension of this method, called Gromov-Wasserstein, which allows the dimensions of the two samples to differ.

- Total variation distance (TV): The L-1 norm of a vector is the sum of the absolute values. For two samples, the TV distance is ½ the L-1 norm of the difference in their densities. The TV distance is restricted in [0,1]. However, it is known to be sensitive, in that two samples that appear to have similar shapes may have a large distance according to this measure.

2: Distance between distributions in terms of ranks

This method uses the empirical samples observed, but unfortunately it does not reflect changes in magnitude at the edges (much like the median of a sample is not affected if the largest values increase), which affects the empirical distribution, but not the relative ranks of observations. It can thus be used for ordinal data. The rank of a value x in a set is the index it appears in when the values of the set are ordered in ascending order. By an analogy, let \{x\} and \{y\} represent the finishing times of racers in two teams, where a lower value of rank means faster time. If the two teams are equal in skill (i.e., these two sets are iid samples from the same distribution), when \{x\} and \{y\} are pooled and the values ordered, and the ranks 1,\dots,(n_x+n_y) calculated, we expect the ranks of the two groups to be roughly evenly mixed among the values \{1,\dots,(n_x+n_y)\}.

The Mann-Whitney U test is a test of this hypothesis. The resulting statistic U calculates the amount of one set’s ranks that are larger than the other. In the test formulation, this statistic, when normalized with its mean and standard deviation, has an asymptotically normal distribution. When U is divided by the product n_x\times n_y (i.e., the total number of pairwise comparisons), giving a measure denoted \rho, we get a value that is in [0,1]. Depending for which variable X or Y U is calculated, this represents the value \text{Pr}(X>Y) + \text{Pr}(X=Y) (or the complement) if they are discrete-valued, and just \text{Pr}(X>Y) for continuous-valued variables (since \text{Pr}(X=Y)=0 in this case).

This measure has the interpretation of the overlap in distributions (with direction), which is different from the overlap in density functions. A value \rho=0.5 (i.e., U is half of n_x\times n_y) means there is basically perfect overlap in distributions, since one variable is equally likely to be larger than the other. This corresponds to zero distance. A value of \rho\approx 1 means X is almost certainly larger than Y, so the distance is large. If U is taken to the minimum of the U-measure calculated with respect to the two variables (call these values U_1 and U_2), then rescaled here, you get a directionless distance measure. This measure is developed as a(X,Y) in “The area above the ordinal dominance graph and the area below the receiver operating characteristic graph” (Journal of Mathematical Psychology) by Donald Bamber.

A quick R illustration is as follows:

#generate random draws

nx <- 100

ny <- 300

x <- rnorm(n=nx, mean=0)

y <- rnorm(n=ny, mean=1)

#name the vectors so we keep track of

#which value came from which variable

names(x) <- rep("x", nx)

names(y) <- rep("y", ny)

#combined vector keeps the names of the values

combined <- c(x, y)

nc <- names(combined)

#sequence of “x”,”y” in order of the values

nc <- nc[ order(combined) ]

#U-statistic is, for each member of x and y,

#how many of the other variable it is lower than,

#then take the sum

U1 <- sum(vapply(which(nc == "x"), function(ii) sum(nc[ ii:length(nc) ] == "y"), 1))

U2 <- sum(vapply(which(nc == "y"), function(ii) sum(nc[ ii:length(nc) ] == "x"), 1))

U <- min(U1, U2)

U

#perform Mann-Whitney test, “W” should be the same as U above

wilcox.test(x, y, alternative="two.sided")

#estimate of Pr(X > Y)

#should be >0.5 since x2 has higher mean

rho <- U/(nx*ny)

rho

3: Multivariate data distance measures

- For multivariate data (even d=2), the idea of comparison across dimensions is difficult to define, which is why many of the univariate metrics above cannot be extended easily to multivariate data. For instance, the CDF, the basis of the KS/AD/CvM measures above, is defined for a vector \boldsymbol{x}=[x_1,x_2,\dots,x_d] as F_X(\boldsymbol{x})=\text{Pr}(X_1\leq x_1\text{ and }X_2\leq x_2\dots\text{ and }X_d\leq x_d). That is, the CDF is the probability on a vector of values \boldsymbol{x} is the probability that all dimensions are simultaneously \leq their respective values of x. The notion of being larger or smaller on “almost all dimensions” is not well-defined at least in this respect, and thus defining the distance of X from Y across multiple dimensions d in terms of the differences between their CDFs is difficult. Furthermore, there is the problem that multivariate density estimation from a sample suffers from the curse of dimensionality, under which distances in high dimensions often do not do well in representing distributional differences. Nevertheless, there are several options:

- Wasserstein distance: As mentioned above, Wasserstein distance is an intuitive measure of the “effort” to move the mass of one distribution to the other, and thus of the distance. It is defined on univariate as well as multivariate data. However, in higher dimensions, Wasserstein distance is known to have high computational cost.

- Maximum Mean Discrepancy (MMD): MMD is a measure that is becoming popular in the ML literature due to its application to many different domains (e.g., text and images), not just numeric data. MMD uses various kernel functions, which can be customized to the domain. A kernel function basically is a way of expressing belief about the distance or relationship that can be expected between two vectors; that is, the distribution of the distance. It serves as a way of embedding the sample \{x\} in a distribution space. A common example is Gaussian kernel between vectors \boldsymbol{x} and \boldsymbol{y}, which takes the form k(\boldsymbol{x},\: \boldsymbol{y})=\exp{\left(- \frac{\|\boldsymbol{x} - \boldsymbol{y}\|^2}{\sigma}\right)} . See http://www.gatsby.ucl.ac.uk/~gretton/papers/testing_workshop.pdf (slides 10-11) for an illustration. The formula for an empirical estimation of MMD from samples \{x\} and \{y\} is k(\boldsymbol{x},\boldsymbol{y})=\frac{1}{n_x^2}\sum_{i=1}^{n_x}\sum_{j=1}^{n_x}k(x_i,x_j) + \frac{1}{n_y^2}\sum_{i=1}^{n_y}\sum_{j=1}^{n_y}k(y_i,y_j) -\frac{2}{n_x n_y}\sum_{i=1}^{n_x}\sum_{j=1}^{n_y}k(x_i,y_j). On a technical note, this formula has a small bias because it includes k(x_i, x_i) and k(y_j,y_j), for which the distance is 0, and thus Gaussian Kernel is 1.

#example of manually calculating MMD

#create 3-dimensional uniform random draws of size 100 and 70

#dimensions should be the same

nx <- 100

ny <- 70

x <- matrix(runif(3*nx), nrow=nx, ncol=3)

y <- matrix(runif(3*ny)+1, nrow=ny, ncol=3)

#R function to calculate MMD between x and y, using

#Gaussian kernel (rbfdot=radial basis Gaussian function,

#the default) with sigma =1

library(kernlab)

mmdo <- kmmd(x, y, kernel=rbfdot, kpar=list(sigma=1))

mmdo

#the important value here is the first order MMD statistics

#which is the estimate of the MMD^2

mmdstats(mmdo)[ 1 ]

#functions to compute Euclidean distance and Gaussian Kernal

euc <- function(a,b) {

sqrt(sum((a-b)^2))

}

gkern <- function(x,y,sigma=1) {

exp(-1*(euc(x,y)^2)/sigma)

}

#MMD is calculated by taking the kernel function across all

#pairs of x and y with themselves (including the kernel of a value

#with itself, which here becomes 1 because the distance is 0 and we

#take the exp) and with each pair of x and y values

#then we take the sum across each

kxx <- sum(vapply(1:nx, function(ii) sum(vapply(c(1:nx), function(jj)

gkern(x[ ii, ], x[ jj, ]), 1)), 1))

kyy <- sum(vapply(1:ny, function(ii) sum(vapply(c(1:ny), function(jj)

gkern(y[ ii, ], y[ jj, ]), 1)), 1))

kxy <- sum(vapply(1:nx, function(ii) sum(vapply(1:ny, function(jj)

gkern(x[ ii, ], y[ jj, ]), 1)), 1))

#calculate the expected value (empirical mean) by dividing

#by the number of pairwise comparisons

mmd_est <- (1/(nx^2))*kxx + (1/(ny^2))*kyy - (2/(nx*ny))*kxy

print(“manual calculation”)

sqrt(mmd_est) #turns out to be mmdo

print(“kernel test”)

print(mmdo)

The crucial aspect of using kernel methods (whether in this application or in general), is that a kernel function k(\boldsymbol{x}, \boldsymbol{y})=\phi(\boldsymbol{x})^T\phi(\boldsymbol{y}), where \phi is a mapping function of inputs \boldsymbol{x} into some feature space, usually higher or infinite-dimensional, and the single value returned by the kernel represents the dot product the respective outputs of the vector \phi. For instance, if k(\cdot,\cdot) is some function that can be represented by an infinite Taylor expansion, such as the exp function, then \phi is an infinitely-long vector, each element of which is some function of the input \boldsymbol{x}, such as the positive integer powers of x (by the Taylor expansion), each of which we can consider as a feature transformation. The new feature space is the collection of all these transformations. The key part is that the kernel can use the results of this infinite-dimensional space \phi by using the kernel function, without having to explicitly compute all of the component features. Kernel methods can be used in classification problems to create classification boundaries in a transformed feature space when separability is impossible in the original space. The output space of the kernel function in the case of MMD is called a reproducing kernel Hilbert space (RKHS). See slides 9-11 of http://www.gatsby.ucl.ac.uk/~%20gretton/coursefiles/lecture5_distribEmbed_1.pdf for an illustration. See https://stats.stackexchange.com/questions/152897/how-to-intuitively-explain-what-a-kernel-is and https://stats.stackexchange.com/questions/69759/feature-map-for-the-gaussian-kernel for additional details about kernels. The technical details about kernels are not necessary to understand the MMD.

Question 05: I have multiple independent distributions (i.e., random variables), or multiple independent samples (i.e., draws from random variables). I want to estimate the distribution of some combination of these. How do I do this?

Answer:

Estimating the distribution of a combination of samples or random variables is very straightforward, if independence can be assumed. We will begin by illustrating the reverse problem, that of estimating a mixture density model on a single density.

Mixture models: De-constructing a density into components

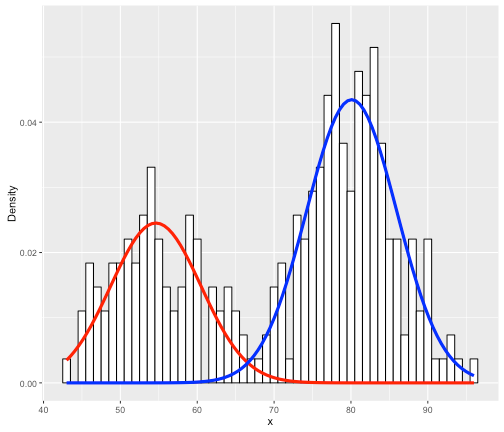

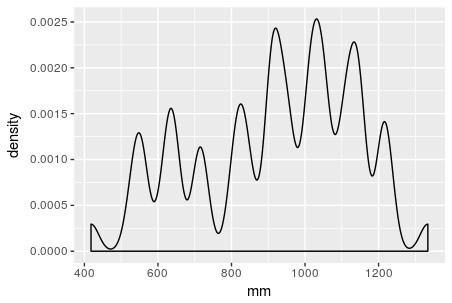

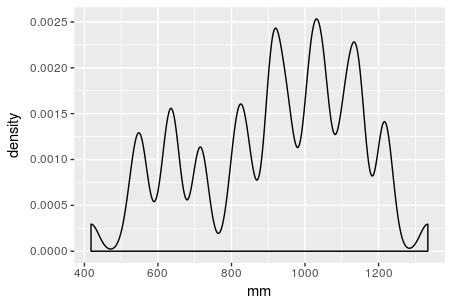

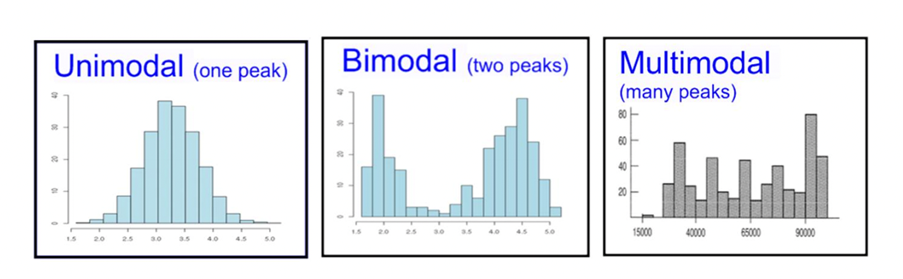

Say you have a density function, typically one that is estimated based on a sample of values (histogram). These densities are often multimodal and “bumpy”, so they cannot be represented as a single parametric density. You want to express the overall density as being the weighted combination of several other densities. Possibly, each of these component densities represents a sub-group of items.

For instance, say your data are the grades (0-100) on a final exam from students at a university, (see the histogram below). Clearly, it is bimodal. We want to construct the overall grade density as being constructed from several, perhaps two, separate densities. Typically, you must specify the parametric form of the components, usually Gaussian/normal. This is called building a finite (since there are a finite number of components) mixture model for your data. In the image below, you can see that the histogram can be approximated as a combination of blue and red normal density functions. Here, the blue peak is more prominent, so that component would receive a higher weight. All density functions must have area=1 under them, but here the component densities appear as different sizes because they are differently-weighted, to better line up with the curvature of the data they represent.

- There are several reasons you may want to do this:

- It is possible that the components may actually represent distinct sub-populations, say, classrooms 1 and 2, where the grade performance is significantly different between them, so they are represented by different distributions. Maybe you want to try to reconstruct the group memberships by the mixture, then see if this corresponds to actual group membership.

- More likely, your data represents some type of true population distribution, which you want to model. The total data density cannot be modeled as single parametric distribution, but can be modeled as a (weighted) mixture of parametric densities, such as normal distributions. That is, the mixture serves as a parametric approximation of the density, but the components do not necessarily represent sub-groups, rather just help approximate the density’s shape.

There are some more recent methods to deconstruct data into a finite mixture of unspecified densities. See for instance R’s mixtools package (https://cran.r-project.org/web/packages/mixtools/vignettes/mixtools.pdf , page 10-12). However, this is a more complicated problem, and can potentially be dangerous because of overfitting.

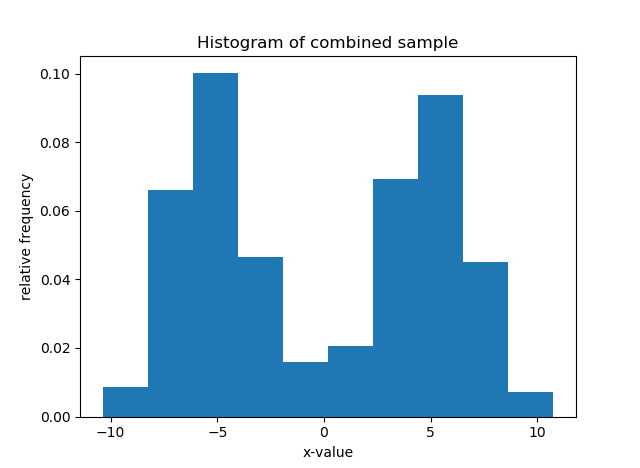

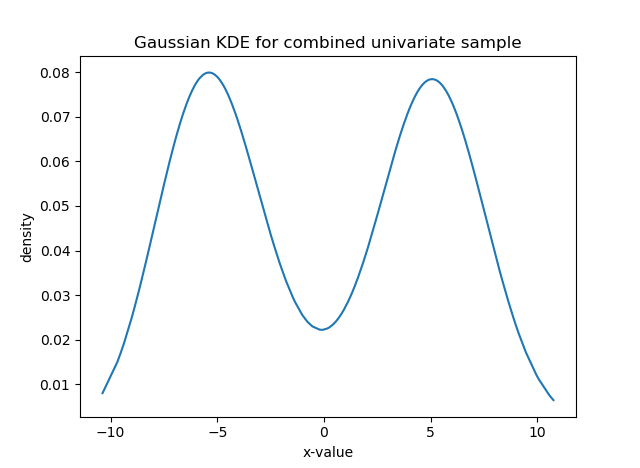

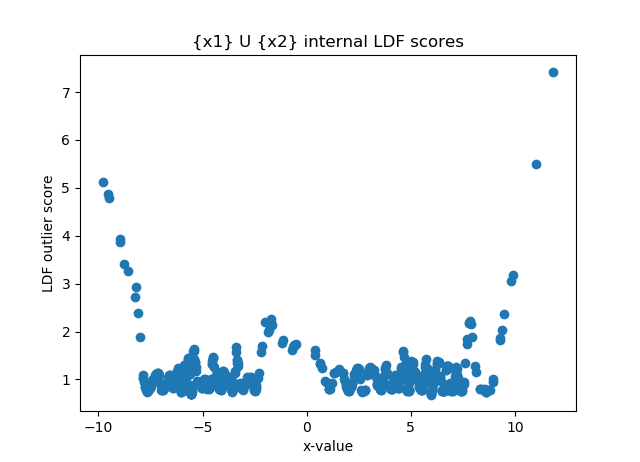

Constructing a combined density from known components

More often, you have several samples or densities that you want to combine into a single density function. This differs from the case discussed above because you already have separate groups to combine, rather than having the combination, which you want to split into meaningful or imaginary components based on the shape. If you have samples, one may think the natural approach would be just to pool the samples, perhaps with sample-specific weights, into a single histogram, for which you can compute a nonparametric density estimate (e.g., in R, the density() function or Python’s statsmodels.api.nonparametric.KDEUnivariate) see first question above, without estimating each density separately first.

However, there are many cases in which rather than pooling the data from different samples, you may want to model the density of each sample separately (either parametrically or not), and then combine them. In this way, particularly if each sample is modeled by a parametric distribution (perhaps there is a good reason to do so, such as in Bayesian applications), you estimate the overall density as a weighted mixture of the group densities. This is similar to the component mixtures discussed above, except that the density is estimated as a mixture of known components (which may be meaningful if the samples represent groups) rather than estimating the components that form a given mixture, which may not be meaningful. Typically, “mixture distribution” means estimating a density as a combination of sub-component densities, whereas we will use “combined density” to refer to a variable or density estimated as a weighted sum of random variables whose distributions are known.

Luckily, calculating a density from a weighted sum of its known components is very easy, with the crucial assumption that your component densities represent independent random variables. This is a very reasonable assumption if they represent independent sample or groups.

In general, say you have n random variables X_1,\:X_2,\dots,\:X_n. Each variable X_i has a known density function f_1,\:f_2,\dots,\:f_n, which may be of different parametric forms. For the following, we will assume each f_i is a parametric density function that can be evaluated at any value in its domain, but it can also be a density estimate at a discretization of the domain (e.g., if the domain is \[0,1\], then we estimate f_i at 0, 0.01, 0.02,\dots,1), which is assumed to be the same for all \{f_i\}. If the \{f_i\} have different domains (e.g., normal is defined on the real line, but gamma variables must be >0), construct a discretization on the union of the domains.

Let w_1,\dots,\:w_n be a vector of normalized weights for these n variables, where in the default case each w_i=1/n. Then, we can form a random variable X=\sum_{i=1}^n w_i X_i, which is the weighted mean of the variables, and its density is likewise represented by the weighted sum of the densities, f_X=\sum_{i=1}^n w_i f_i. Its mean \mu_X and variance \sigma_X^2 can be easily calculated as a weighted sum of the means (\mu_i) and variances (\sigma_i^2) of the n random variables, as \mu_X=\sum_{i=1}^nw_i \mu_i and \sigma_X^2=\sum_{i=1}^n w_i^2 \sigma_i^2. This is true for any independent random variables by the linearity property of the mean and variance (see http://www.stat.yale.edu/Courses/1997-98/101/rvmnvar.htm).

While the weighted mean X will always have the mean and variance above, in certain cases it will also have a known parametric form. For instance, if each X_i\sim\text{N}(\mu_i, \sigma_i^2) and they are independent, then X\sim\text{N}\left(\sum_{i=1}^n w_i\mu_i, \quad \sum_{i=1}^n w_i^2 \sigma_i^2\right), as above. Other random variables, such as Gamma, have similar, though more restrictive, such properties (see examples for different distributions). More likely than not, the expression f_X=\sum_{i=1}^n w_i f_i will not have a single parametric form.

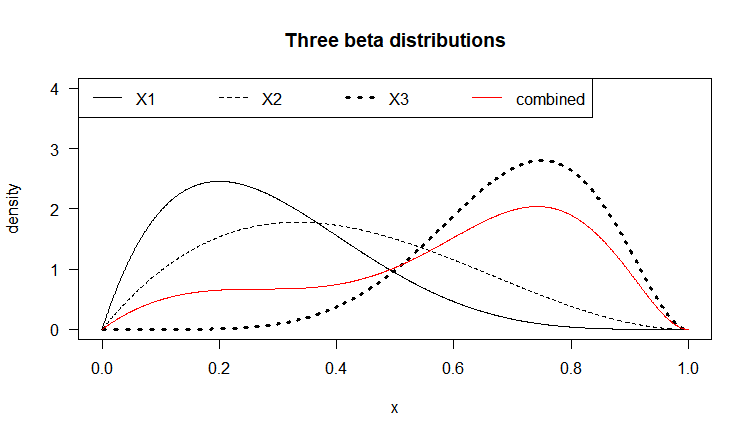

Calculation example with Beta distributions

Let each X_i be a Beta-distributed random variable (with domain between 0 and 1), which is defined by two parameters \alpha_1 and \alpha_2 (also denoted \alpha and \beta, in R called shape1 and shape2). Below we graph three (n=3) Beta distributions with different parameters, in black. Say we have a weight vector w_1=0.2, \:w_2=0.1,\: w_3=0.7 (note \sum_{i=1}^{n} w_i=1), representing the relative importance of these variables. The combined density function, plotted in red, represents the weighted average of these three variables. The bumpiness of the combined density depends both on the relative density values of the f_i at given values of the domain, as well as the values w_i of the weight vector.

#weight vector

w <- c(0.2, 0.1, 0.7)

#discretization of the domain space [0,1]

dp <- 0.001

p <- seq(0, 1, by=dp)

plot(x=p, y=dbeta(p, shape1=2, shape2=5), type="l",

main="Three beta distributions", ylab="density", xlab="x",

ylim=c(0,4), las=1)

lines(x=p, y=dbeta(p, shape1=2, shape2=3), lty=2)

lines(x=p, y=dbeta(p, shape1=7, shape2=3), lty=3, lwd=3)

legend("topleft", lty=c(1:3,1), lwd=c(1,1,3,1), col=c(1,1,1,2),

legend=c(paste("X", 1:3, sep=""), "combined"), horiz=TRUE)

#combined density expression as sum of individual betas

comb_dens <- function(p) {

w[ 1 ]*dbeta(x=p, shape1=2, shape2=5) +

w[ 2 ]*dbeta(x=p, shape1=2, shape2=3) +

w[ 3 ]*dbeta(x=p, shape1=7, shape2=3)

}

#plot combined density

lines(x=p, y=comb_dens(p), col="red")

#check it approximates a density

sum(comb_dens(p)*dp)

#0.9999994

Since the function f_X=\sum_{i=1}^n w_i f_i, similarly its value at any point 0\leq x\leq 1 is f_X(x)=\sum_{i=1}^n w_i f_i(x). This is an exact expression, not an approximation. It cannot be simplified further, since unlike the other distributions, there is no parametric expression for a sum of Beta distributions with arbitrary hyper-parameters (clearly the red curve above is not Beta-distributed, for instance). The approximation comes when we discretize the domain \[0,1\] with gap \Delta=0.001 at x=0,\: 0.001,\: 0.002,\dots,\: 0.999,\: 1. When we conduct calculations, say an integral over the combined density function f_X, approximated by a discrete (Riemann) sum with bin width \Delta, we should get approximately 1 (densities must sum or integrate to 1 across their domain). These calculations will be good approximations, if we have specified a fine enough discretization, and that the density is not too bumpy/sharp or approaching infinite values (asymptotes) anywhere in the domain (for Beta, this can happen at the domain endpoints).

comb_dens <- function(p) {

w[ 1 ]*dbeta(x=p, shape1=2, shape2=5) +

w[ 2 ]*dbeta(x=p, shape1=2, shape2=3) +

w[ 3 ]*dbeta(x=p, shape1=7, shape2=3)

}

Application to estimating combined success probability

What is an application for this? Say that you are a gambler playing three different games: (1) poker, (2) blackjack, and (3) roulette. Ignoring how much money you win at each game, let’s just consider your win probability distribution for each.

(Side note: Each time you play a game, you either win or lose, so it’s a binary random variable. Instead of simply taking the empirical proportion (\hat{p}=\frac{\&hash;&space;\text{wins}}{\&hash;&space;\text{times&space;played}}) as an estimate for the unknown true win probability p, typically p is treated as a random variable and its distribution is estimated. In many cases, p is estimated by a normal distribution centered around \hat{p} (by the Central Limit Theorem), but since we must have 0\leq p\leq 1 by definition, in Bayesian settings usually the Beta distribution is used, along with a prior distribution on p. This is more sensible since it works with samples of any size (unlike the normal approximation, which is only accurate above a certain minimum), and the domain of the Beta (\[0,1\]) matches the range of values of p, which it is trying to estimate, unlike the normal, whose domain is all real numbers.)

Let X_1,\:X_2,\:X_3 be random variables, whose distributions express our uncertainty of the win probabilities p_1,\:p_2,\:p_3 of each game. p_1,\:p_2,\:p_3 are unknown, but can be estimated by playing each game multiple times and counting the relative numbers of wins/losses for each. X_1,\:X_2,\:X_3 will be modeled as Beta-distributed, as noted above. Each time a game is played, the hyper-parameters \alpha_1 and \alpha_2 of each X_i are increased; this means that the more we play a given game, the more information and certainty we have about the respective p_i, and the variance of X_i will decrease. Thus, the density f_i of X_i depends not only on the relative proportion of wins/losses in each game, but also tends to get more concentrated the more you play. The image shows that since X_3 has more of its distribution to the right than the other two, you are more successful at roulette than poker or blackjack.

Now, given the estimated densities, the weights w_i may represent the fraction of bets played on each game (i.e., your preferences). This means, since w_i=0.7, you play 70% of all your bets at roulette. Then the combined distribution X=\sum_{i=1}^n w_i X_i would represent the distribution of your probability of winning at a randomly chosen game (given the weights). That is, since it is more likely that you will be playing roulette than a different game, the distribution of X more heavily reflects the distribution on X_3, roulette. Notice that the red density is bimodal and does not have a single parametric form, other than its expression f_X=\sum_{i=1}^n w_i f_i.

Once we have a density expression for f_X, we can do anything with it we would do with any density function. For instance, we can compare two players with different success probability distributions X_i and weights w_i, and compare them overall on their win distributions, perhaps with a distribution distance function.

Question 06: I want to construct a confidence interval (CI) for a statistic. Many CI formulas are calculated in a form similar to "\text{value} \pm 1.96\times\text{standard deviation}" When is it appropriate to use these, and how do I figure out which one to use?

Answer:

First, some background: a statistical or machine learning (ML) procedure often involves estimating certain parameters of interest. For example, in a linear regression, the values of slope parameters \beta, and the \text{R}^2 or the regression, are of interest. Confidence intervals (CIs) are often used to give additional information about the variability inherent in an estimate, in addition to just the single value (point estimate) calculated from our data. In any given situation, we treat the parameter estimate as a random quantity (since it depends on the data we have, which is some random sample of the total population of interest), and thus, they have a theoretical statistical distribution, typically symmetric around the single value estimated. Many statistical significance tests for model parameters are based on the probabilities observed in the theoretical distribution conditional on the null hypothesis being true.

For instance, in linear regression, the null hypothesis for the test of whether a dependent variable X_k is significant in the regression is \text{H}_0: \beta_k=0, that its coefficient is on average 0. To decide if X_k is significant, we see how unusual its observed slope value is \hat{\beta_k}, where “how unusual” is defined according to the theoretical distribution, if in fact the average was \beta_k=0 (that is, that there is no linear relationship). The true population slope \beta_k is the one that would be measured if we conducted a regression on the full population of al values Y and X that exist (e.g., the regression of height Y on weight X for all members of the population). The observed slope \hat{\beta_k} from our sample is an estimate of \beta_k since we only have a sample of the population values, and thus it depends on the particular sample we have. A CI for \beta_k is constructed, centered around the estimate \hat{\beta_k}, and if 0 is outside of it, we reject the null hypothesis and conclude that the slope is not insignificant, that is, that it cannot be 0. Since the statistical test and the estimated CI are based on the same statistical assumptions, we need to be aware of which assumptions are made.

In a CI, we decide on a confidence level, which is a high number like 90%, 95%, or 99% (expressed as a decimal). Our CI would express the range of values that our parameter might take, say, 95% of the time. For instance, a regression might estimate the \beta_1 slope value as being -2.76, but say that 95% of the time we expect it to be between -2.8 and -2.72. The fact that this interval is short tells us that the estimate is stable and that we can be confident in our estimate. In the hypothesis test framework, the fact that the CI does not contain 0 (the hypothesized value under the null \text{H}_0) means that the slope is significantly different from 0.

A common default confidence level is 95%. Many people are familiar with a confidence interval that looks like “x \pm 1.96\times(\text{standard deviation})”. When is it appropriate to use these, and where do these numbers come from?

The sample mean

As mentioned above, typically we treat the parameter we would like to estimate as being unknown. To know it, we would have to have access to all the data (the “population”), which because it is so vast, is treated as impossible. A few examples:

- \mu is the mean income if we considered all working adults in the US.

- p is the fraction, considering all American voters who would vote for candidate X .

- \beta_1 is the slope parameter and \text{R}^2 is the coefficient of determination we would get on the regression of Y= “salary in dollars” and X= “years of work experience” for lawyers in the US, if we somehow had a dataset of these variables for all lawyers in the US.

In statistics, we treat these population parameters as unknowable. To learn about them, we take a (representative) random sample of size N, consisting of values \{x_1,\dots,x_N\}. Typically we would calculate the sample mean (“X-bar”), \bar{X}=\frac{1}{N}\sum_{i=1}^N x_i as the sample equivalent of the population parameter. Estimates of a parameter are often denoted with a \hat{\phantom{X}} (“hat”), so we would say, for instance, \bar{X}=\hat{\mu}, that is “the sample mean is our estimate for the parameter \mu”.

The fact that here we use the sample mean is crucial, because the sample mean has very nice statistical properties. Let’s take the case of estimating the population mean \mu, which in this case could represent the average income of working American adults. Therefore, each adult X_i in our sample is one randomly-drawn instance from the distribution of random variable X = “income of an American adult”. The term “expected value”, denoted E(\cdot), means, the average we expect a random variable to have. Therefore since \mu is the mean, the expected value is always E(x_i)=\mu. Assume for now that the population standard deviation is \sigma.

The following are true, no matter what the distributional form of X is:

- E(\bar{X})=\mu_{\bar{X}}=E\left(\frac{1}{N}\sum_{i=1}^N x_i\right)=\frac{1}{N}\sum_{i=1}^N E(x_i)=\frac{1}{N}\sum_{i=1}^N \mu=\frac{1}{N}(N\mu)=\mu

- Standard deviation of \bar{X}=\sigma_{\bar{X}}=\frac{\sigma}{\sqrt{N}} (the standard deviation of an estimator or statistic, such as \bar{X}, is also called a standard error)

The first formula shows that the sample mean \bar{X} has the same expected value as the mean \mu itself. Therefore, using the sample mean \bar{X} itself as a guess for \mu is a good idea; this is why many CIs are centered around the sample mean. The second formula, which we do not prove here, says that the standard deviation of \bar{X} (remember, it is a random variable depending on the sample we get) depends on \sigma, and as we get a larger sample (N is larger), the standard deviation decreases, meaning our value of \bar{X} is more and more likely to be close to the true, unknown \mu.

The Central Limit Theorem

Note, we have not said anything about what the population distribution is. Surely, since \bar{X} is a function of values X_i drawn from this distribution, its distribution should depend on that of X, and in order to make a CI, we have to have a distribution. The equations above are true for any X’s distribution. The good news is that, due to a theorem called the Central Limit Theorem, we can say that basically as long as we have a large enough sample N (typically at least 30), that the distribution of the sample mean \bar{X} has a normal distribution, with mean \mu_{\bar{X}} and \sigma_{\bar{X}} as given above, regardless of the underlying population distribution of X.

(Technical Note: Actually, if \sigma is not known (which is a more realistic assumption), it can be approximated by the sample standard deviation s, so the standard error \sigma_{\bar{X}} is estimated as \frac{s}{\sqrt{N}} rather than \frac{\sigma}{\sqrt{N}}. Then, the variable T=\frac{\bar{X}-\mu}{s/\sqrt{N}}, which standardizes \bar{X} around the unknown mean \mu, will have a T-distribution with degrees of freedom depending on the sample size (=N-1). If N is big enough, the T-distribution converges to standard normal, and assuming it is standard normal is a good enough approximation.)

If the statistic \bar{X} has a normal distribution, which is symmetric around the mean \mu and unimodal, the best CI for it is symmetric, and given according to quantiles of the standard normal distribution. In a standard normal distribution, 95% of the probability is between (-1.96, 1.96). Since \bar{X}\sim \text{N}(\mu_{\bar{X}}, \: \sigma_{\bar{X}}), the 95% CI for \mu by \bar{X} is \bar{X}\pm 1.96\sigma_{\bar{X}} = \bar{X}\pm 1.96\frac{s}{\sqrt{N}}. As mentioned, the distribution should technically be T rather than normal in the case of the sample mean where s replaces \sigma, but the normal distribution is easier to use in calculations and is an acceptable approximation for large enough samples.

This means that any statistic that can be expressed as a sample mean of a sample has the same property. For instance, say your data X_i are binary (X_i\in \{0,1\}), such as yes/no responses to a survey question. Here, \hat{p}=E(X_i)=\frac{1}{N}\sum_{i=1}^N X_i is the observed sample proportion of X’s equaling 1. In this case, p is the population parameter (e.g., the proportion of the population of voters that will vote for a particular candidate), which is estimated by the proportion \hat{p} in the sample. Since \hat{p} can be calculated as the sample mean of the binary X_i, it also has a normal distribution, with parameters \mu_{\hat{p}}=p and \sigma_{\hat{p}}=\sqrt{\frac{\hat{p}(1-\hat{p})}{N}}. The same is true of linear regression parameters \beta_k, except that technically a T-distribution should be used, especially if there are a large number of regressors, since their T-distribution degrees of freedom (=N-K) depends both on the sample size N and number of regression parameters K. However, again, if N is big enough, the normal distribution is an acceptable approximation.

(Side notes: (1) the estimate \hat{p} itself is used to approximate the standard deviation, so sometimes instead the standard deviation formula is \sigma_{\hat{p}}=\sqrt{\frac{0.5\times 0.5}{N}}, where 0.5 is used to give the most conservative (worst-case), since it maximizes \sigma_{\hat{p}} for a given N. (2) while by definition, p\in [0,1], the normal distribution has support on all real numbers, so it’s possible that the CI bounds for \hat{p} will be outside of [0,1]. One solution to this is to use Bayesian posterior intervals based on the beta distribution, which will be restricted to the correct domain, but will not typically be symmetric.)

However, the regression R^2 statistic cannot be expressed as a sample mean of independent draws. The same thing is true for order statistics or quantiles of samples, which are not sample means. Therefore, calculating a CI for them cannot be done by assuming a normal distribution. However, bootstrap sampling can be used to conduct nonparametric inference and calculate approximate CIs for these kinds of statistics. Bootstrap sampling uses repeated re-sampling from a fixed sample, calculates the statistic value for each re-sampling, then bases the CI and inference from those. Even though the data is resampled, each sample is independent of the others, so the statistic values in each sample are mutually independent, since its value in one sample will not affect the value in another. This allows for inference to be conducted, except that the estimated variance in bootstrapping needs to be adjusted to reflect the fact that the bootstrap samples, while independent, were from a fixed initial sample and not new (non-overlapping) samples from the original population.

Summary:

- Statistics calculated from our samples, such as quantiles, mean, regression R^2, etc., are random quantities, since they depend on the specific sample collected.

- We conduct inference on these calculated values to learn about the corresponding unknown population parameters that they approximate. A typical technique is to calculate a confidence interval (CI) for the value of the population parameter, which is based on the theoretical distribution of the value of the sample statistic across multiple hypothetical samples.

- If the desired statistic can be represented as a sample mean of underlying data, then if the sample is big enough, we can use the Central Limit Theorem result to say it has an approximately normal distribution. Then, we can use simple and convenient facts about the normal distribution to calculate a CI. Otherwise, we will generally need some other technique like bootstrapping.

Question 07: I have a fixed set of (named) objects, or a fixed table of class variable levels with fixed probabilities. I want to generate a random sample with replacement from my sample or of levels of the class variable, where the resulting relative frequencies most closely match the fixed sample or probabilities. What is a good way to do this?

Answer:

Let \mathbf{x}=\{x_1,\dots,x_k\} be a set of (without lack of generality) items of distinct value; these may be (distinct) numeric values or (distinct) categorical values, say names or levels of a categorical variable. Let \mathbf{w}=\{w_1,\dots,w_k\}, w_i \geq 0 \forall i be a set of weights on the corresponding object in \mathbf{x}. \mathbf{w} can be easily converted to probabilities by dividing each w_i by the sum, in which case this represents a discrete distribution over the values \mathbf{x}, but the weights need not be normalized. We would like to draw a random sampling of size N with replacement of objects x_i in \mathbf{x} according to the weights \mathbf{w}. In the default case, all w_i are equal (w_i \propto 1/k \forall i), in which case we are drawing an unweighted random sample, the usual case.

Random sampling with replacement (RSWR) proceeds by drawing one item from \mathbf{x} on each of j=1,\dots,N turns; when we replace the drawn item, the weights w_i don’t change after each sampling. On average (assuming that the weights w_i have been rescaled so they sum to 1) the number of times x_i will be selected (which is a random variable itself) is on average N\times w_i times, with variance N\times w_i \times (1-w_i). These values come from the fact that the number of times each object is selected jointly follow a multinomial distribution. The multinomial is a generalization of the binomial distribution to more than 1 dimension, where a draw consists of a vector of k elements of nonnegative integer values that sum to N, where the values are on average N times the weight value. The frequency counts for each value x_i are dependent because the total frequency must be N. Using a multinomial function to generate draws is shorthand for drawing one value each of N times with probabilities \mathbf{w}.

The problem is that although each x_i will be selected a number of times proportional to its weight (average = N\times w_i\propto w_i), the variance can be fairly high. For example, let k=3, \mathbf{x}=\{x_1,x_2,x_3\}, where x_1=0, x_2=1, x_3=2. Let standardized weights be w_1=0.27, w_2=0.13, w_3=0.6. Let us make N=53 draws (chosen purposely so N\times w_i is never an integer). The average number of draws for each is shown below:

x = [0,1,2] w = [0.27, 0.13, 0.6] N = 53 #expected number of draws for each expected = [N*ww for ww in w] print(expected) #[14.31, 6.890000000000001, 31.799999999999997]

Let us conduct a large number of iterations of this process and tally the results:

import numpy as np num_iter = 1000 #generate 1000 vector draws of size N with multi_draws = np.random.multinomial(n=N, pvals=w, size=num_iter) #display the first several rows multi_draws[:8,] #each row of the following is a 3-element frequency vector summing to N=53 #in total there are num_iter=1000 rows #array([[20, 1, 32], # [14, 3, 36], # [13, 8, 32], # [21, 4, 28], # [11, 7, 35], # [15, 8, 30], # [10, 8, 35], # [13, 4, 36]]) #calculate summary statistics: mean, variance, min, and max #mean np.apply_along_axis(arr=multi_draws, axis=0, func1d = np.mean) #array([14.32 , 6.791, 31.889]) #the above are very close to the expected values [14.31, 6.890000000000001, #31.799999999999997] np.apply_along_axis(arr=multi_draws, axis=0, func1d = np.var) #array([ 9.9236 , 6.115319, 12.452679]) #theoretical frequency variance: #[N*ww*(1-ww) for ww in w] #[10.4463, 5.994300000000001, 12.719999999999999] np.apply_along_axis(arr=multi_draws, axis=0, func1d = np.min) #array([ 6, 1, 19]) np.apply_along_axis(arr=multi_draws, axis=0, func1d = np.max) #array([24, 18, 44])

In the above simulation, the average frequencies are as expected. However, the variances are very high. For instance, x_2, which had the lowest weight, is drawn at one point 18 times (33% of 53, when we expect it to be only 13%). Obviously, the large variance and gap between the minimum and maximum are related to the fact that we made a large number of draws, whereas on a single draw, the frequencies should be closer to the expected value. In many cases, however, we want the selection frequency of each item selected to be as invariable as possible, say, within the expected value N\times w_i rounded up or down.

A good technique for this is called low variance sampling (as opposed to the above, which had high variance). One version of this was proposed by James Edward Baker in his article “Reducing bias and inefficiency in the selection algorithm”, from Proceedings of the Second International Conference on Genetic Algorithms on Genetic Algorithms and Their Application (1987). A short function for this is as follows:

def low_var_sample(wts, N): # ex: low_var_sample(wts=[1,4],N=2) xr = np.random.uniform(low = 0, high = 1 / N, size =1) u = (xr + np.linspace(start=0, stop=(N - 1) / N, num=N)) * np.sum(wts) cs = np.cumsum(wts) indices = np.digitize(x=u, bins=np.array([0] + list(cs)), right=True) indices = indices -1 return(indices)

Now let’s repeat low-variance sampling for N times, and show a few examples:

lvs = [low_var_sample(wts=w, N=N) for ii in range(num_iter)] lvs_freqs = [[list(lv_draw).count(ii) for ii in [0,1,2]] for lv_draw in lvs] np.array([lvs_freqs[ii] for ii in range(5)]) #notice that the frequency counts vary only by one up or down #array([[15, 6, 32], # [15, 7, 31], # [14, 7, 32], # [14, 7, 32], # [15, 6, 32]]) np.apply_along_axis(arr=lvs_freqs, axis=0, func1d = np.mean) #array([14.319, 6.885, 31.796]) np.apply_along_axis(arr=lvs_freqs, axis=0, func1d = np.var) #array([0.217239, 0.101775, 0.162384]) [N*ww*(1-ww) for ww in w] #[10.4463, 5.994300000000001, 12.719999999999999] np.apply_along_axis(arr=lvs_freqs, axis=0, func1d = np.min) #array([14, 6, 31]) np.apply_along_axis(arr=lvs_freqs, axis=0, func1d = np.max) #array([15, 7, 32])

Notice that the mean frequency counts are close to what is expected (14.31, 6.890000000000001, 31.799999999999997), but this time their variance is much lower. We see that the min and max frequencies observed only differ by 1, (e.g., between 14 and 15), so the observed numbers will essentially be the expected values rounded either up or down.

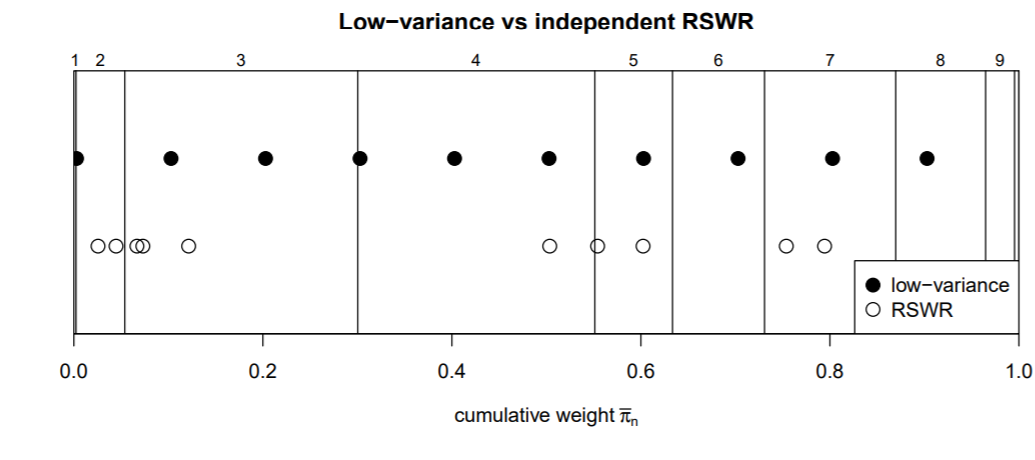

Here we explain the logic behind these results. The following illustration shows a different example with k=N=10 and different weights). An analogy is trying to throw darts at a dartboard such that the fraction of darts in each zone corresponds to the overall area fraction of each zone. RSWR corresponds to, say, N=1000 iid dart throws (with the same probability distribution of landing in a given place), which, if the distribution is not uniform on the space, will tend to be concentrated in given areas. Even if the distribution is uniform, as we have seen, since the draws are independent, there will be a fair amount of overall variability in repeated sets of 1,000 throws. Low variance sampling corresponds to throwing the darts in as fine and evenly-space a grid of locations as possible (that is, so the locations are not iid), to cover the board evenly.

The following rectangle has width 1. The partition into ten rectangles (vertical lines) is done so the width of rectangle i is proportional to weight w_i. Low variance sampling spreads the N draws across the interval [0,1] in an equally-spaced manner, since the draws are done “together” rather than independently as in RSWR. The only random element is an initial draw of xr uniformly on [0, 1/N], after which the remaining N-1 points are equally spaced with gap \frac{N-1}{N^2} (linspace function). The cumsum then maps these points to the bins representing the weights, so that we expect on average the frequency of points in the bins to be proportionate to the weight, and with low variance.