The future of analog AI hardware

New research from IBM presented at this year's IEDM conference shows that a future where we can train and run AI systems on energy-efficient analog hardware is on the horizon.

Since the dawn of the computer era, the world has been building chips that compute precisely. The digital world as we know it is the result of endless 1s and 0s: constant sums processed on chips that return definite answers. But what sorts of computations can be done where being precise is less important?

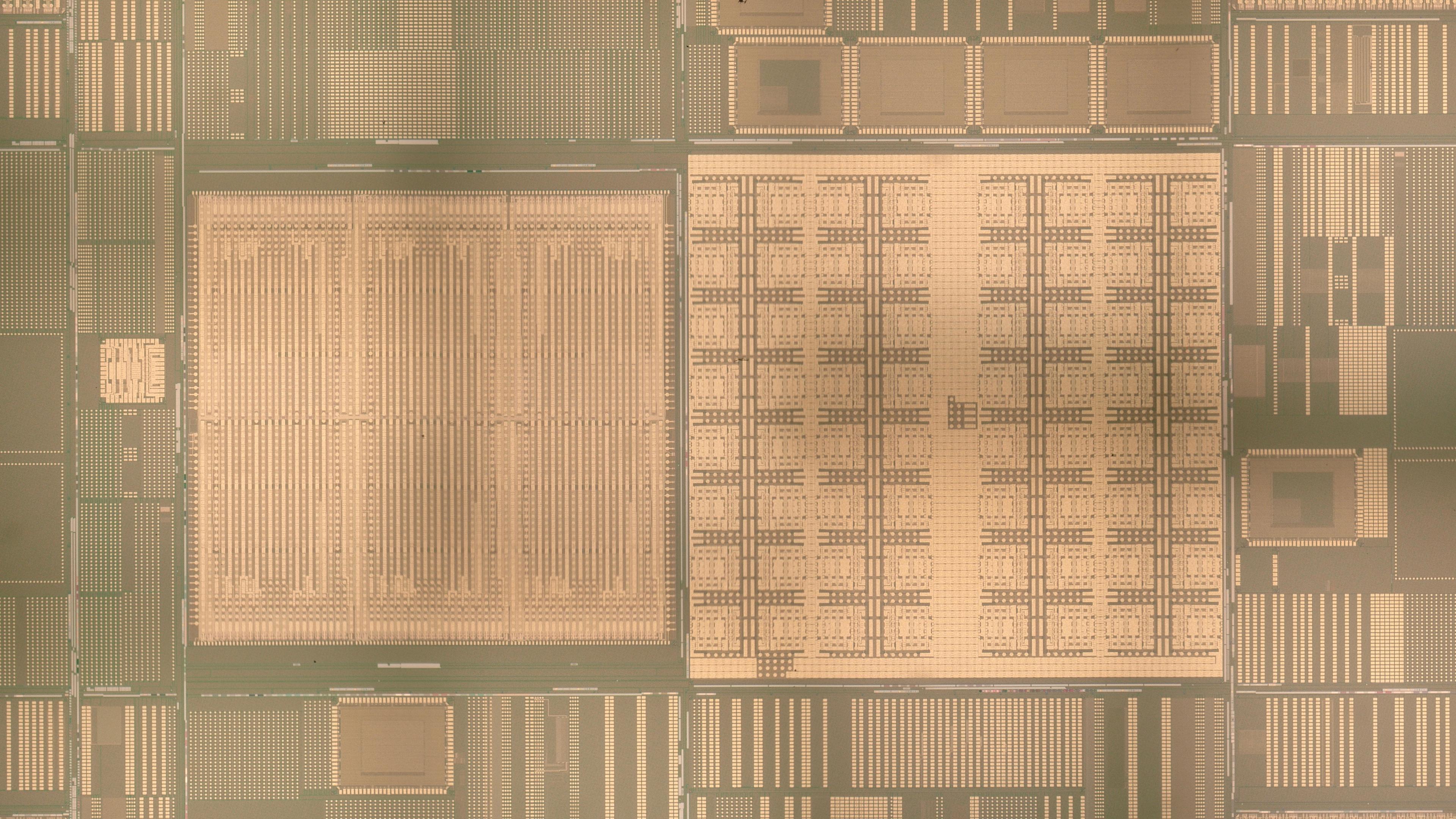

In computing, much of the time and energy spent on processing goes into shuttling electrons back and forth between a device’s processor and its memory. For years, IBM researchers have been developing analog in-memory computing chips, where the computation is carried out in the memory itself. The goal of these chips is both to save energy and to build devices that could be used for training AI systems and running inference with them.

What we’ve used computers to calculate has always needed to be precise. You can’t guess the flight path of a rocket, or hope your tax software will roughly figure out what you owe that year. But some things in life don’t have to be quite so exact. If you learn to drive in one country, for example, you’ll likely recognize a stop sign in another country even if you’ve never seen that country’s version before.

At this year’s IEEE International Electron Devices Meeting (IEDM), IBM researchers are presenting work that details how future efficient analog chips could be used for deep learning, both for training and for inference.

When building AI systems, you have to train a model on data. At the start of training, the model’s ability to infer what you’re after, whether that’s recognizing cat photos or finding new drug ideas, is poor. You run the model, tweak its weights based on the results, and run it again, repeating until it reaches the accuracy you’re after. It’s unsurprising, then, that running inference on an already-trained model is easier than training a model from scratch.

But it’s not a task without its challenges. Researcher Julian Buechel presented a paper at IEDM describing how a team of IBM researchers has been working to accurately map a model’s weights onto an analog memory chip for running inference tasks.

The team’s work has shown that phase-change memory (PCM) devices (see Note 1) have the potential to be used to map a neural network’s synaptic weights onto analog conductance values. These conductance values need to be accurate. In the past, researchers have had to send an electrical pulse into each cell of a device to read back the weight it stores, which can be time-consuming. Each cell also outputs only a small current when read, so there is a high chance of error when trying to determine its weight.

Rather than reading each cell, the team tested whether it would be possible to read all the cells in a layer of a neural network at once. After all, what matters is the accuracy of the matrix-vector multiplication that each layer performs, not the accuracy of any single cell, whose value can change in the time between measuring one device and the next, and whose resistance can differ slightly from that of its neighbors. With the group’s method, each layer of a model can be tested in parallel. In tests on neural networks (such as ResNet9 on CIFAR-10), Buechel found that models running on analog hardware were more accurate than in past efforts on analog hardware. The method is also technology-agnostic.
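To make the layer-level idea concrete, here is a toy Python sketch that judges a programmed layer by its matrix-vector product rather than cell by cell. Everything in it is an assumption for illustration: the Gaussian write and read noise, the 4×8 layer size, and the gradient-style correction rule in the hypothetical `correct_layer` function. It is not the procedure from Buechel’s paper; it only shows that correcting all cells against the layer’s output error drives down the error the network actually sees.

```python
import random

def matvec(G, x):
    # Output i is the dot product of row i with the input, as a crossbar computes it.
    return [sum(g * xi for g, xi in zip(row, x)) for row in G]

def program_layer(W, write_noise, rng):
    # Assumed device model: each programmed conductance lands near its target
    # with Gaussian error.
    return [[w + rng.gauss(0.0, write_noise) for w in row] for row in W]

def mvm_error(G, W, inputs):
    # Mean squared error of the layer outputs, which is what the network consumes.
    total, count = 0.0, 0
    for x in inputs:
        for y_analog, y_ideal in zip(matvec(G, x), matvec(W, x)):
            total += (y_analog - y_ideal) ** 2
            count += 1
    return total / count

def correct_layer(G, W, inputs, lr, steps, read_noise, rng):
    # Hypothetical correction rule: measure the whole layer's output for one input
    # (with read noise), then nudge every cell along the gradient of the squared
    # output error instead of reading and fixing cells one at a time.
    for _ in range(steps):
        x = rng.choice(inputs)
        target = matvec(W, x)
        measured = [y + rng.gauss(0.0, read_noise) for y in matvec(G, x)]
        for i, row in enumerate(G):
            err = target[i] - measured[i]
            for j, xj in enumerate(x):
                row[j] += lr * err * xj
    return G

rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(4)]
inputs = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(50)]
G = program_layer(W, write_noise=0.1, rng=rng)
before = mvm_error(G, W, inputs)
G = correct_layer(G, W, inputs, lr=0.05, steps=2000, read_noise=0.01, rng=rng)
after = mvm_error(G, W, inputs)
print(f"layer MVM error before: {before:.5f}, after: {after:.5f}")
```

Each correction step relies on a single whole-layer measurement, so every cell in the layer is adjusted at once; individual cell values never need to be read out.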

While the method is not yet perfect, it’s a key step toward bringing inference accuracy closer to that of digital accelerators, with the goal of eventually commercializing analog in-memory computing chips.

Creating systems that can train AI is a far more challenging task than building ones that can infer. IBM researchers led by Takashi Ando, collaborating with Tokyo Electron (TEL), a partner company at the AI Hardware Center in Albany, have been working on how to train AI on analog hardware. When training an AI model, you feed data into the network, classify it, and then back-propagate the error through the network to fine-tune the weights. (Inference is in effect a subset of training, where you use only the classification part of the process.)
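The training loop described above can be sketched digitally with the smallest possible network: a single logistic unit learning logical AND. This is a plain floating-point illustration, not analog hardware, and the function names, learning rate, and epoch count are arbitrary choices. `forward` is the inference step, while `train` repeats forward pass, error computation, and weight update, which for a single layer is the whole of back-propagation.

```python
import math

def forward(w, b, x):
    # Inference: weighted sum plus bias, squashed by a sigmoid into P(class = 1).
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=2000, lr=0.5):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            p = forward(w, b, x)  # forward pass: classify the example
            err = p - y           # error signal: d(cross-entropy)/dz for a sigmoid output
            # Backward pass and update: each weight moves against its gradient err * x_i.
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]  # logical AND
w, b = train(data)
predictions = [round(forward(w, b, x)) for x, _ in data]
print(predictions)  # [0, 0, 0, 1]
```

After training, classifying new inputs only calls `forward`, which is why inference is the lighter of the two workloads.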

Fine-tuning a model with a conventional algorithm requires a device whose conductance changes perfectly symmetrically, so that an increase and a decrease of the same requested size change the weight by the same amount, but no such device currently exists. Researchers in the field tend to use existing technology, like conventional ReRAM devices, but the IBM team tried something different: a full-stack approach, co-optimizing the algorithm and the hardware in concert. This is the first known work to apply both an algorithm and hardware customized for AI training on state-of-the-art CMOS technology.
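A minimal sketch of why symmetry matters, assuming a simple and commonly used "soft-bounds" device model on a normalized weight range of [-1, 1] (an assumption here, not the ReRAM behavior reported by the team). Repeated pairs of equal and opposite update requests leave an ideal device where it started, while the asymmetric device drifts toward the point where its up and down steps balance.

```python
def ideal_update(w, delta):
    # A perfectly symmetric device applies exactly the requested change.
    return w + delta

def soft_bounds_update(w, delta):
    # Assumed soft-bounds device on [-1, 1]: upward steps shrink as w nears +1,
    # downward steps shrink as w nears -1.
    return w + delta * (1.0 - w) if delta > 0 else w + delta * (1.0 + w)

def apply_pulse_pairs(update, w, delta, pairs):
    # Request +delta then -delta, `pairs` times; the net requested change is zero.
    for _ in range(pairs):
        w = update(w, +delta)
        w = update(w, -delta)
    return w

ideal = apply_pulse_pairs(ideal_update, 0.8, 0.05, 100)
asym = apply_pulse_pairs(soft_bounds_update, 0.8, 0.05, 100)
print(f"ideal device: {ideal:.3f}, asymmetric device: {asym:.3f}")
```

Here the ideal device stays at 0.800 while the asymmetric one ends near -0.026, even though the requested net change is zero. A conventional training algorithm writing every update to such a device would see its weights pulled away from where the gradients point.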

When training a neural network with analog hardware, you’re looking for the gradients of the error function; if they’re steep, you update the weight by a large amount. Conventionally, gradient and weight information are stored in the same analog device, resulting in stringent requirements on device symmetry. The team has been working on an algorithm called Tiki-Taka (yes, like the soccer style of constant passing back and forth) designed to relax the symmetry requirements by separating gradient and weight information into two different systems.
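The sketch below is a toy, single-weight illustration of separating gradient and weight information, under several stated assumptions: the same hypothetical soft-bounds device model as a stand-in for real hardware, Gaussian noise on each gradient to mimic minibatch training, a target weight of 0.6, and a simplified transfer in which array A is reset after each transfer into the weight array C. It is not IBM’s Tiki-Taka implementation; it only shows why keeping the noisy, frequent gradient updates off the weight device can reduce the bias an asymmetric device introduces.

```python
import random

def device_update(w, delta):
    # Hypothetical soft-bounds device on [-1, 1]: upward steps shrink near +1 and
    # downward steps shrink near -1, so the device is not symmetric.
    return w + delta * (1.0 - w) if delta > 0 else w + delta * (1.0 + w)

def noisy_grad(w, target, rng):
    # Stochastic gradient of 0.5 * (w - target)**2, as if from a small minibatch.
    return (w - target) + rng.gauss(0.0, 1.0)

def single_device_sgd(target, steps, lr, rng):
    # Conventional scheme: every gradient step is written to the weight device.
    w, history = 0.0, []
    for _ in range(steps):
        w = device_update(w, -lr * noisy_grad(w, target, rng))
        history.append(w)
    return sum(history[steps // 2:]) / (steps - steps // 2)

def two_array_sgd(target, steps, lr, transfer_every, rng):
    # Simplified two-array scheme: array A accumulates gradient steps near its
    # symmetric midpoint, and only every `transfer_every` steps is its content
    # moved into the weight array C.
    a, c, history = 0.0, 0.0, []
    for t in range(1, steps + 1):
        a = device_update(a, -lr * noisy_grad(c, target, rng))
        if t % transfer_every == 0:
            c = device_update(c, a)
            a = 0.0  # simplification: A is reset after each transfer
        history.append(c)
    return sum(history[steps // 2:]) / (steps - steps // 2)

rng = random.Random(42)
target = 0.6
single = single_device_sgd(target, steps=20000, lr=0.01, rng=rng)
two_array = two_array_sgd(target, steps=20000, lr=0.01, transfer_every=25, rng=rng)
print(f"target {target}, single device {single:.2f}, two arrays {two_array:.2f}")
```

With these settings the single device settles well below the target, while the two-array version lands noticeably closer to it, because C receives fewer, averaged updates instead of every noisy gradient step.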

For the experiments, the team has been customizing a 14 nm CMOS-based ReRAM array to test its ideas. Using a simulation based on statistical data from the ReRAM array, the researchers found that by optimizing the ReRAM materials and the Tiki-Taka algorithm at the same time, they could reach 97% accuracy (versus 98.2% in floating point) for a three-layer, fully connected deep neural network (DNN) on MNIST data. TEL is helping develop deposition and etching processes for the novel ReRAM materials, drawing on its manufacturing prowess and long history of close partnership with IBM Research in Albany. More work is needed to reach floating-point accuracy for large DNNs, but Nanbo Gong, who presented the work at IEDM, said the team has identified pathways to get there.

With both efforts, IBM Research is getting closer to a future where we can train and run AI systems on energy-efficient analog hardware.

Notes

- Note 1: Phase-change memory (PCM) is an emerging non-volatile memory technology that has recently been commercialized as storage memory in computer systems.