IBM is infusing robots with AI to monitor critical systems at the edge

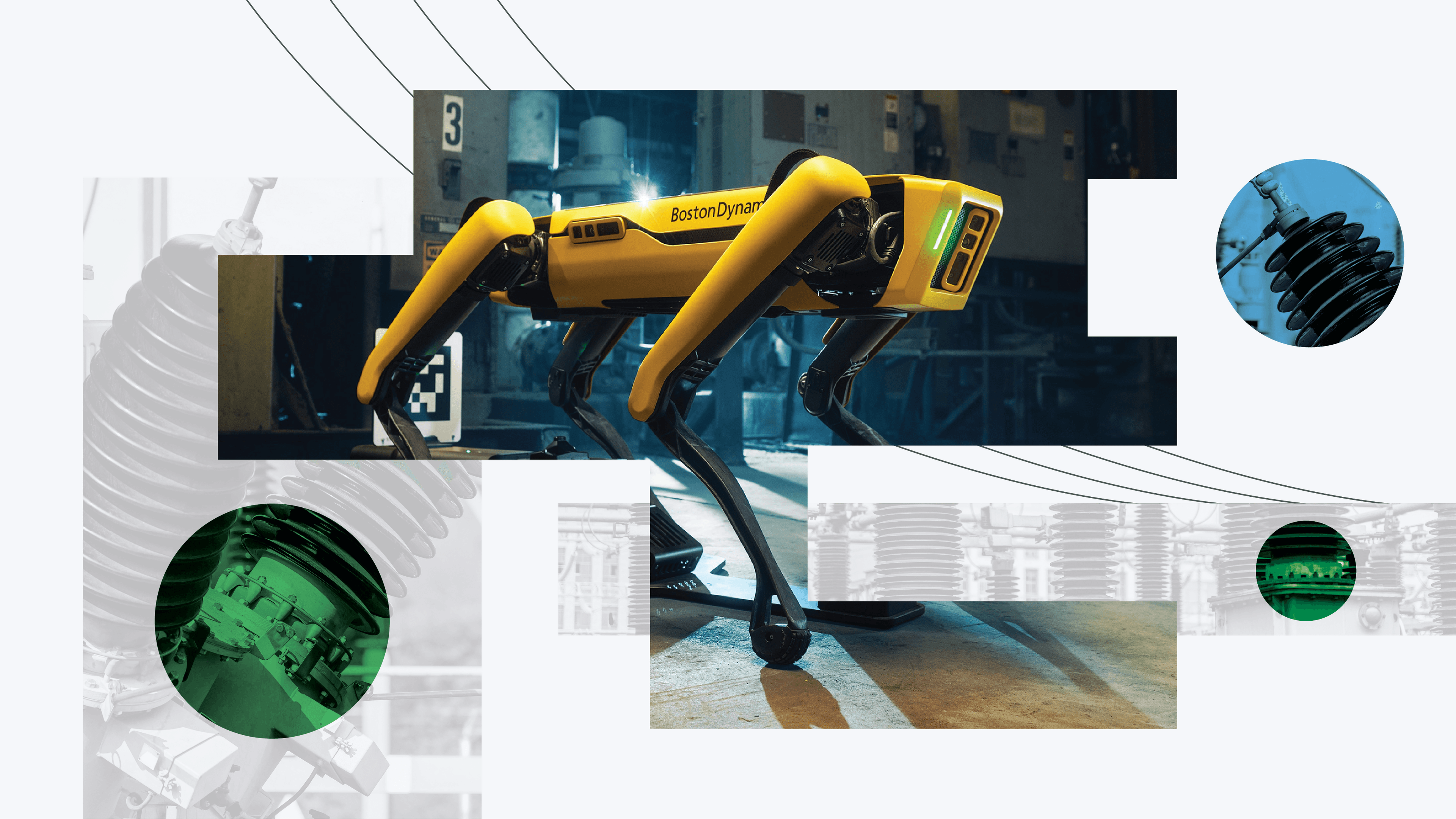

Combining the power of AI, remote sensing, and edge computing, IBM is working with National Grid and Boston Dynamics to transform how facilities can be monitored for safety issues autonomously.

Inspections can be dull, dirty, and dangerous, and yet they’re critical for maintaining business equipment and operations.

The need to monitor facilities remotely came to the forefront during the pandemic, as the workforce changed and people simply were not available to do inspections. That turned robots from an interesting novelty into a powerful tool for automating these inspections.

Robots can cover areas that are dangerous or inaccessible to humans, from electrical substations and chemical plants to mines and oil rigs, at any time of day. They can also upload their findings instantly, using AI and edge computing for local inference to reduce data movement and storage costs. Companies no longer have to wait for a clipboard of inspection results to be transcribed into a database and evaluated before corrective action is taken. All of this can now be automated with robots and an integrated edge AI software stack.

In the case of electrical substations, the average time U.S. customers spend without power has increased from two hours per year to eight hours per year. Climate change has played a part in this, but it’s also the case that substations are often inspected only every other month. Power companies can be missing vital information on their facilities, like issues with overheating, vibration, or cracks. Add a massive storm into the mix and a burgeoning issue can balloon into a catastrophe. When there are issues, it might also be too difficult for a human to inspect a facility and work to fix the problem. In high winds, for example, a human can’t inspect an outdoor facility, and so might miss key data on what is vibrating or coming loose under those conditions, data that would not show up when inspecting the equipment on a calm day.

Consistent, regular inspection data, gathered at any time of day and whatever the weather, can be used in predictive analytics to help prevent problems from occurring. IBM Research is creating AI to detect the hot spots, cracks, and other issues that can cause outages. We’re building object detection combined with optical and thermal imaging to determine which small component is the root of the problem. This helps people come prepared with the right equipment and parts to do repairs, rather than spending time assessing what is broken.
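As a rough illustration of how object detection and thermal imaging could be combined to pinpoint a component, the sketch below takes bounding boxes (as an object detector might produce) and a grid of temperatures, then reports which component contains a hot spot. The function name `hottest_components`, the box format, the component labels, and the 70 °C limit are all assumptions made for this example; the article does not describe IBM's actual models or thresholds.

```python
def hottest_components(thermal, boxes, limit_c):
    """Return (label, peak temperature) for boxes whose peak exceeds limit_c.

    thermal: 2D list of temperatures in Celsius, indexed [row][col].
    boxes:   {label: (top, left, bottom, right)}, bottom/right exclusive.
             In practice these would come from an object detector; here
             they are hard-coded, hypothetical values.
    """
    flagged = []
    for label, (top, left, bottom, right) in boxes.items():
        peak = max(
            thermal[r][c]
            for r in range(top, bottom)
            for c in range(left, right)
        )
        if peak > limit_c:
            flagged.append((label, peak))
    # Hottest component first, so the most urgent repair is listed at the top.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# A tiny made-up thermal frame (degrees Celsius) with one hot pixel.
thermal = [
    [30, 31, 30, 29],
    [30, 95, 32, 30],
    [29, 31, 30, 41],
]
boxes = {"bushing": (0, 0, 2, 2), "connector": (1, 2, 3, 4)}

flagged = hottest_components(thermal, boxes, limit_c=70)
print(flagged)  # [('bushing', 95)]
```

Tying the temperature reading to a named component, rather than to a pixel location, is what would let a repair crew know which part to bring.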

At IBM Research, we’ve been working on how to take the power of a device like Spot – the mobile robot from Boston Dynamics – and use it to solve real problems that companies are facing. We’re integrating our thermal and visual AI inference into Spot’s payload and building AI models that would allow the robots to read analog gauges and interpret thermal images to detect problems.
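To make the gauge-reading idea concrete, here is a minimal sketch of the final step only: converting a needle angle into a value on the dial. It assumes a vision model has already estimated the needle angle, and that the gauge's scale is linear. The function `gauge_value` and its calibration parameters are hypothetical and are not taken from IBM's models.

```python
def gauge_value(needle_deg, min_deg, max_deg, min_value, max_value):
    """Linearly map a needle angle onto the gauge's printed scale.

    All parameters are hypothetical calibration inputs: the angles at which
    the scale starts and ends, and the values printed at those two marks.
    """
    if max_deg == min_deg:
        raise ValueError("calibration angles must differ")
    fraction = (needle_deg - min_deg) / (max_deg - min_deg)
    # Clamp so a slightly mis-detected needle cannot report an off-scale value.
    fraction = max(0.0, min(1.0, fraction))
    return min_value + fraction * (max_value - min_value)


# Example: a pressure gauge whose scale sweeps 270 degrees from 0 to 100 psi.
reading = gauge_value(
    needle_deg=135.0, min_deg=0.0, max_deg=270.0,
    min_value=0.0, max_value=100.0,
)
print(f"{reading:.1f} psi")  # 50.0 psi
```

A non-linear dial would need a lookup table of tick positions instead of the single linear interpolation shown here.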

Enabled by these AI and hybrid cloud innovations from IBM Research, IBM Consulting has developed an end-to-end solution that integrates with Spot to detect issues and immediately notify staff so they can correct them. This offering, Robotics Solution for Asset Performance, is being used by companies like National Grid to actively test the technology and show its value. IBM Research developed and validated the thermal imaging and gauge reading models with two major IBM clients, including National Grid.

By turning autonomous devices like Spot into roaming edge devices, we can show the value in having robots take on dangerous, yet critical tasks, to get data vital to business operations. Some research challenges remain, however, including ensuring that models running on these robots are constantly improving, and that all the models needed for inspections are managed properly from a distance.

An AI model’s accuracy is directly tied to the quality of the data in its training set, and that data can vary from one day to the next. As a result, we’ve developed a system called Out-of-Distribution (OOD) Detection to automatically determine the suitability of an image for training. Images can be out of focus or simply not representative of the outcome the model is trying to produce. This technique can also be applied after a model has been created to detect changes in the stream of data the model is taking in, and to react to those changes to prevent false-positive readings.
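The general idea of out-of-distribution detection can be sketched with a simple statistical check: summarize known-good images by a few features, then flag any new image whose features sit far from that reference. The example below uses a per-feature z-score, which is a deliberately simple stand-in and not IBM's OOD system (the article does not describe its internals). The feature choice (brightness, sharpness), the sample values, and the threshold of 3.0 are all assumptions.

```python
from statistics import mean, pstdev


def fit_reference(features):
    """Per-feature mean and standard deviation of known-good samples."""
    columns = list(zip(*features))
    # Fall back to a tiny sigma so a constant feature cannot divide by zero.
    return [(mean(col), pstdev(col) or 1e-9) for col in columns]


def ood_score(sample, reference):
    """Largest absolute z-score across features; higher means less typical."""
    return max(abs(x - mu) / sigma for x, (mu, sigma) in zip(sample, reference))


def is_out_of_distribution(sample, reference, threshold=3.0):
    return ood_score(sample, reference) > threshold


# Hypothetical per-image features: (mean brightness, sharpness score).
known_good = [(0.52, 0.80), (0.48, 0.78), (0.50, 0.82), (0.51, 0.79), (0.49, 0.81)]
reference = fit_reference(known_good)

typical_image = (0.50, 0.80)
blurry_image = (0.50, 0.20)  # sharpness far below anything in the reference set

print(is_out_of_distribution(typical_image, reference))  # False
print(is_out_of_distribution(blurry_image, reference))   # True
```

The same check can serve both purposes mentioned above: filtering unsuitable images before training, and watching a deployed model's input stream for drift.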

One example of OOD in use is a supply chain interruption that forces a manufacturer to switch to a new supplier that makes a similar product. The parts supplied may be subtly different, perhaps in something as minor as their color. If a component has to be replaced in a substation, it might look a little different from the original. An AI model without OOD could flag the new part as defective and cause a costly error. OOD reduces how often these subtle differences are flagged as false positives.

“With Spot and with AI capabilities, it allows National Grid to inspect critical electrical and gas facilities quickly and thoroughly, while allowing staff to perform other critical duties. We’re able to be more efficient with our time,” said Dean Berlin, Lead Robotics Engineer at National Grid. “But more importantly, we’re able to conduct the work safely. The robot can enter dangerous, highly electrified areas where humans cannot go, unless we shut down a station. This lets us monitor areas routinely without costly shutdowns.”

As AI scales into new industries, ensuring the right model is being used for the right problem at the right time is critical for clients aiming to automate their operations. Conditions at a single assembly line can vary drastically because of new defects, changing environments, or lighting. AI models need to be dynamic, constantly retraining with new data. When a single model is updated, organizations need to ensure that the update is sent to every edge device on their system; otherwise, defects will be missed and false positives will occur. Dealing with a system like this requires a hub and spoke management model.

The hub, located either on premises or in the cloud, is where the models are aggregated, retrained, and redeployed to the right edge devices, each of which is an individual spoke. The spokes can send information back to the hub as well. One spoke, such as a Boston Dynamics Spot, could detect a new anomaly, capture data, and send it to the hub. The data can then be labeled by an expert and used to retrain the model. The new model is then deployed to all identical devices in the ecosystem.
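The hub and spoke flow described here can be sketched in a few lines. In this illustration, retraining is reduced to bumping a version number, and the class names `Hub` and `Spoke`, the device IDs, the sample name, and the label are all hypothetical. It is a toy model of the data flow under those assumptions, not IBM's management software.

```python
from dataclasses import dataclass, field


@dataclass
class Spoke:
    device_id: str
    device_type: str
    model_version: int = 0


@dataclass
class Hub:
    spokes: dict = field(default_factory=dict)    # device_id -> Spoke
    versions: dict = field(default_factory=dict)  # device_type -> latest version
    pending: dict = field(default_factory=dict)   # device_type -> labeled samples

    def register(self, spoke):
        """Add a spoke and give it the current model for its device type."""
        self.spokes[spoke.device_id] = spoke
        spoke.model_version = self.versions.setdefault(spoke.device_type, 1)

    def report_anomaly(self, device_id, sample, label):
        """A spoke uploads captured data; an expert-supplied label is attached."""
        device_type = self.spokes[device_id].device_type
        self.pending.setdefault(device_type, []).append((sample, label))

    def retrain_and_deploy(self, device_type):
        """Stand-in for retraining: bump the version, push to matching spokes."""
        if not self.pending.get(device_type):
            return []  # nothing new to learn from
        self.pending[device_type] = []
        self.versions[device_type] += 1
        updated = []
        for spoke in self.spokes.values():
            if spoke.device_type == device_type:
                spoke.model_version = self.versions[device_type]
                updated.append(spoke.device_id)
        return updated


hub = Hub()
for s in (Spoke("spot-1", "spot"), Spoke("spot-2", "spot"), Spoke("cam-1", "fixed-camera")):
    hub.register(s)

# One robot finds something new; the hub retrains and updates every identical device.
hub.report_anomaly("spot-1", sample="thermal_frame_0042", label="cracked insulator")
updated = hub.retrain_and_deploy("spot")
print(updated)  # ['spot-1', 'spot-2']
```

Note that `cam-1` keeps its original model version: only devices of the same type as the reporting spoke receive the retrained model, which matches the "all identical devices" behavior described above.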

These edge devices don’t all have the same levels of compute power or memory capacity. We’re also developing techniques to automatically send a model to a device such as Spot when it’s in a certain location and, when the inference is done, remove the model from the machine to save space.
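One way to picture location-based model delivery is as a small cache on the robot that loads only what the current location needs and frees the space afterward. The sketch below is an assumption-laden illustration: the class `EdgeModelCache`, the model names, their sizes, the location names, and the 120 MB capacity are invented for the example, and the `fetch` callable stands in for whatever download mechanism a real system would use.

```python
class EdgeModelCache:
    """Keeps only the models needed at the robot's current location."""

    def __init__(self, capacity_mb, fetch):
        self.capacity_mb = capacity_mb
        self.fetch = fetch   # hypothetical callable: model name -> size in MB
        self.loaded = {}     # model name -> size in MB

    def enter_location(self, required_models):
        # Free space first by dropping models this location does not need.
        for name in list(self.loaded):
            if name not in required_models:
                del self.loaded[name]
        for name in required_models:
            if name not in self.loaded:
                size = self.fetch(name)
                if sum(self.loaded.values()) + size > self.capacity_mb:
                    raise MemoryError(f"not enough space for {name}")
                self.loaded[name] = size

    def leave_location(self):
        # Inference is done here, so remove everything to save space.
        self.loaded.clear()


# Made-up model sizes and a made-up mapping from location to required models.
MODEL_SIZES_MB = {"gauge-reader": 40, "thermal-hotspot": 60, "crack-detector": 80}
LOCATION_MODELS = {
    "transformer-bay": ["thermal-hotspot", "gauge-reader"],
    "switchyard": ["crack-detector"],
}

cache = EdgeModelCache(capacity_mb=120, fetch=MODEL_SIZES_MB.__getitem__)

cache.enter_location(LOCATION_MODELS["transformer-bay"])
after_first = sorted(cache.loaded)

cache.enter_location(LOCATION_MODELS["switchyard"])
after_second = sorted(cache.loaded)

cache.leave_location()
print(after_first, after_second, cache.loaded)
```

All three models together (180 MB) would not fit in the assumed 120 MB budget, which is the situation that makes swapping models by location worthwhile.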

With all our research, we need to ensure that data can be trusted, is relevant to the problem at hand, keeps the model performant, and stays secure. Research is focusing on innovations that establish this trust. We’re also working on ways to monitor any unusual behavior on an edge device, such as sending or receiving data it shouldn’t.

Research’s advancements in AI and edge computing are bringing new possibilities for solving clients’ problems cost-effectively, with low-touch ease of use and a high impact on productivity and safety.